Abstract

‘Forensic metascience’ involves using digital tools, statistical observations or human faculties to assess the consistency of empirical features within scientific statements. Usually, those statements are contained within formal scientific papers.

A forensic metascientific analysis of a paper is the presentation of one or more observations made about features within that paper. These can be numerical, visual, or textual / semantic features.

Forensic metascientific analysis is designed to modify trust by evaluating research consistency. It is not designed to ‘find fraud’. While this may happen, it is not the sole focus of forensic metascience as a research area and practice; it is simply the loudest consequence.

Inconsistencies may be intentional or accidental, deceptions or honest mistakes, but regardless they change the trustworthiness of a scientific claim. If, say, a key reference underlying a grant application lacks trustworthiness, it does not matter why this happened; it only matters that this reference is not relied on in isolation for further work.

Of course, the opposite is also true. When analysis determines elements within a paper are perfectly internally consistent, this should increase trustworthiness as well. This often happens during the analysis of well-reported, well-curated documents. Typically, these findings are much more interesting to private parties (universities, investors, funders) and less interesting to the scientific public.

A good forensic metascientist has learned the mechanics of the investigatory techniques outlined below — but this is not so challenging, as these are generally quite simple. More importantly, they have learned when each of them can be responsibly deployed to evaluate a paper. This requires more scientific literacy and good judgment than data analytic competency, programming skill, or innate talent.

With practice, a normative graduate student can become a capable forensic meta-analyst, and an experienced peer reviewer or statistician can become an excellent forensic meta-analyst. However, the best analysts are stubborn, curious, and detail-oriented, regardless of their background or experience.

The following is a guide to learning many of the available techniques in forensic metascience that have a stated quantitative approach in the tradition of Knuth’s literate programming. All code is given in R.

This is designed to be a living document, and as new techniques are developed and validated, they will be added in future defined and dated editions. A minimal level of statistical knowledge is assumed, approximately that of an intermediate college-level applied statistics course. Sections that do not have accompanying code are assumed trivial, but could be added on request. Previous versions and rollbacks will be available as editions proceed. Please flag any potential inclusions, errors, code modifications, etc. to james(dot)heathers(at)protonmail(dot)com.

One planned future addition is testing and background material which will more readily allow all of the following to be used in teaching courses.

This project was primarily completed from September through December 2024 thanks to the generous support of the Bill and Melinda Gates Foundation.

Citation:

Heathers, J. (2025) An Introduction to Forensic Metascience. www

Why We Analyze Papers

Forensic metascientific analysis is specialized, time-consuming, and — if paid for — expensive. It is sufficiently inaccessible that only a select few papers are ever analyzed.

Having established the general aim of analysis (to modify the trustworthiness of scientific work through the pursuit of consistency), there are several reasons a paper may be subjected to analysis:

- A key citation. In the preparation of a grant or paper, there may be results cited which are crucial to establishing a rationale. It is important that such papers contain no provable inaccuracies. In the absence of re-analyzable data, code, or other study materials (which are typically either not available or slow to access), forensic analysis can be useful.

- A flag noticed in casual reading. In general scientific reading, an incongruent or problematic feature might be noticed (see ‘Flags’ below). Sometimes these can be quite obvious. If present, a ‘flag’ dramatically increases the likelihood an analyst will find additional problems. A flag can be anything: for instance, if the mean is reported as two different values across the whole paper; if a statistical test is clearly wrong, or out of range (e.g. F=200, d=4.5, or similar); if there is a noticeable mismatch between test statistics and p-values, etc. The list of flags is quite long, and some are outlined below.

- A diligence process. A university, venture capital firm, funding body, grant committee, or other interested party may require documents to be analyzed for accuracy. The reasons for wanting this are obvious: most commonly, the documents might affect a funding, investment, or hiring decision.

- A ‘report’. Forensic metascientists often act in a consultant role to domain experts, and a ‘report’ is simply a flag that is noticed by someone else and passed to an analyst. These are often more sophisticated than regular flags, as they are features being noticed as incongruent by someone with specialized knowledge. An analyst can often take such a report and work responsibly in unfamiliar scientific areas. Reports are a common reason that a forensic meta-analysis is conducted.

- A research interest. Data within a paper may be wanted for meta-analysis, mega-analysis, or other form of re-analysis. Some forensic techniques provide reconstructed data which can be used to test different hypotheses, or provide Monte Carlo estimates, or provide comparison points for other datasets, etc.

- Author history. In the interests of pursuing general scientific accuracy, analysts often work on papers of authors who have already shown themselves to have published inaccuracies elsewhere.

- Just because. Science is supposed to involve in-depth scrutiny, and scientists are supposed to be curious. Analysts often become interested in ‘the details behind the details’ in their own right.

- To extend forensic metascientific techniques. Sometimes to investigate a certain test or data feature, the only requirement for analysis is that a paper use that test or feature.

Flags

What is a flag?

Forensic metascience techniques are most commonly deployed on a document which becomes a target paper after a flag is identified — generally, flags are the typical starting point of an analysis. As above, a flag is simply any visible incongruity:

- A data feature that seems statistically unlikely or unusual (for instance, an effect size of d=4 in a treatment effect you would expect to be less substantial)

- A data feature that is missing (for instance, a whole-sample n is given, but no cell sizes)

- A data feature that seems odd in the context of a field (for instance, an anemia rate in a wealthy country that is over 40%)

- An unusual method for the presentation of data (for instance, using median [IQR] and ordinal analysis for data that is always described in other equivalent papers as normally distributed)

- A paper with strange forensic scientometric features, or unusual language (for instance, a paper that has a block of 12 citations to the same author group inserted at random, or the text including an AI prompt that says ‘Generate response’!)

- Even spelling or referencing mistakes, if they are in a crucial portion of the paper which you would expect to be heavily scrutinized

- Any other unusual, repeated observation — after spending some time in any individual research area and uncovering some incongruities, you will be able to generate categories of your own.

Flags can come from anywhere. Specifically:

- An analyst may regard a journal, author, or combination of authors as suspicious (for instance, when the paper is authored by a group who have several Pubpeer.com entries), and be reading on that basis

- An analyst may notice a flag during general reading

- An analyst may uncover a flag after initial analysis

- An analyst may receive a paper from a domain expert, someone who understands the field very well and is more capable of noticing incongruities (as above, these are ‘reports’, and are just flags from someone who knows more than you do)

- An analyst may discover a flag elsewhere (for instance, a suspicious clinical trial registration) which leads back to a paper published on the data it described

Discovering a flag leads to triage.

Flags and their place in triage

A forensic meta-analyst has two jobs:

(1) assess the details presented within a target paper to investigate its trustworthiness, as above; but also

(2) protect their time

Triage is extremely important, because triage protects time.

Choosing what to work on is just as important as learning how to deploy the analysis methods.

Like analysis itself, triage is a skill that develops with intuition and experience.

A practically infinite number of papers exist, and a practically infinite number of potential analyses can be deployed on them, and within these endless opportunities, we have a task where it is easy to become hyper-focused, curious, or to switch from a chosen analysis path in order to establish a new observation.

Combined, these two factors are fatal to the timely completion of analysis.

As a consequence, an informal system of managing analysis has evolved. The one outlined here is not the only one possible! Whether the following is executed precisely to the letter is less important than (a) that you use a formal triage system if conducting the work in a professional capacity, and (b) that you follow it, while continuing to develop it.

A potential triage system has four stages:

(1) Reading

Reading leads to flags (see above), and flags lead to…

(2) Initial analysis

Initial analysis is the first step of using the techniques outlined in this document to analyze parts of the target paper. In general, it is not required to keep notes, nor make a record of analysis pathways. Analysis can be haphazard or systematic, depending on the circumstances (maybe only a few things are very slightly suspicious, for instance). If initial analysis reveals one or more problems that warrant further investigation, it leads to…

(3) Formal analysis

Formal analysis takes longer because it involves the presentation of all elements within a target paper in a separate document. All in this case really does mean all — trustworthy or not, analyzable or not, every analysis should be included alongside any code, technique, etc. with findings. This is often the end of an investigation — formal analysis on a single paper, for instance, would be what a journal editor suspicious of a result in their journal would expect. But if formal analysis is in service of a broader goal, it leads to…

(4) Scouring

Scouring is the meticulous coverage of all relevant elements beyond simply a paper, including but not limited to two or more papers, a whole journal issue, a whole grant application or proposal, a whole career. Essentially it is a series of formal analyses with a theme. Scouring can take a long time. It is usually boring and — if it is being funded — expensive. You would need an exceptionally strong reason to scour the entire publication record of a single prolific researcher. Scouring an author’s entire corpus of work can take years, and thousands of hours of analysis time. This requires, more than anything else, incredible patience and steadfastness. As a consequence, it is also reasonably uncommon.

In short: it is usually best practice to decide in advance how much work you will do on any given paper, or for any given task, or for any given contract. You will never have the time to throw the analytical kitchen sink at every paper in front of you.

An Introduction to Techniques

Having found a flag, or perhaps requiring that a single chosen document be checked for accuracy without one, an analyst proceeds to deploying the available data analytic techniques on a paper.

Unfortunately, many papers actively resist this. For instance:

- It is very hard to analyze a systematic review beyond the time-honored technique of ‘reading all the cited papers, and making a determination of whether or not they are accurately represented’.

- Likewise, opinion pieces, viewpoints, qualitative research, etc. — these all lack the necessary features to analyze.

- Commonly, some more technical fields may present data too complicated or esoteric to analyze.

- More commonly still, papers that are poorly reported do not support analysis very well, because there is very little information available — writing a poor quality paper is actually a viable defense against that paper being checked!

- Some data types present summary statistics that make for tricky inferences. For instance, survey items that are made up of many subscales with unusual or non-standard combinations of items.

That being said, many of them do allow this. For those, we have this guide.

For the tests that I have personally contributed to, I maintain a website with information and resources about all the tests you can access here. Future editions of this document may include dramatically expanded external resources.

Principles to keep in mind

- It’s OK to get help. Many analysts do not work alone, but in small teams (2 or 3 would be normal). The workflow in this environment usually consists of: (1) working individually on separate observations, and then presenting them to others for checking; or (2) working collaboratively on the same codebase, document, analysis body, etc. simultaneously; or (3) recreating the same analysis of the same document while mutually blinded. Teamwork is the first and best protection against raising unfounded points of inconsistency, and the fact you have to immediately convince another skeptic will dramatically affect your confidence. This is a good thing — it is supposed to do that. It is generally a bad idea to work by yourself and then release analysis publicly, until you are experienced. Unfounded observations are unfair to authors, personally and legally dangerous to the analyst, may waste journal and/or university time, and reduce the trustworthiness of forensic metascience as a whole.

- It’s OK to be frustrated. Out of a basket of randomly chosen papers, how many of them can be analyzed? There is no good estimate at present, but a bad estimate would be: about half. If you wish to analyze a paper but conclude ‘there is nothing I can responsibly analyze here’, it is understandable to be disappointed or frustrated sometimes. Likewise, if an analyst would like to see the raw data behind a paper to confirm it, it will usually not be available. Likewise, if an analyst takes findings to an academic journal, government body, or university, there may be a complete lack of interest shown in what you’ve found. This is simply part of the work. It is inevitable and unavoidable. All you can do is make your case, and move on.

- Indeterminate answers are common. Often you analyze a table or a series of numbers, and you don’t have a concrete answer to the question: “Is this data inaccurate?” The data may be impossible, incredibly unlikely but technically possible, or merely just somewhat strange — and you may not be able to determine which of those options it is. Again, this is part of the experience. And, having found an inaccuracy, you may be entirely unable to determine what caused it.

- Forensic metascience can scare people. Most researchers or other research-affiliated people have not heard of what you are about to learn to do. Many people find the experience of being presented with a forensic meta-analysis rude, frightening, or confusing, especially if they are not statistically minded. So, present data and findings straightforwardly, and with the maximum amount of explanation necessary. Resist the temptation to write angry emails in all-caps. Do not copy a dozen uninterested third parties into those emails. If you act like a crank, you will be treated as one.

Data Techniques

Simple numerical errors

Given that scientific papers can have hundreds or thousands of individual details typed into a narrative structure, numerical errors are understandable.

However, given scientists have access to free, robust, controllable data environments, numerical errors can also be suspicious and dramatically reduce trustworthiness — especially if those errors are favorable to the hypotheses of interest, repeatedly expressed, or accompanied by other incongruities.

The below are some examples of canonical errors which do not need accompanying code or longer explanations.

Summation

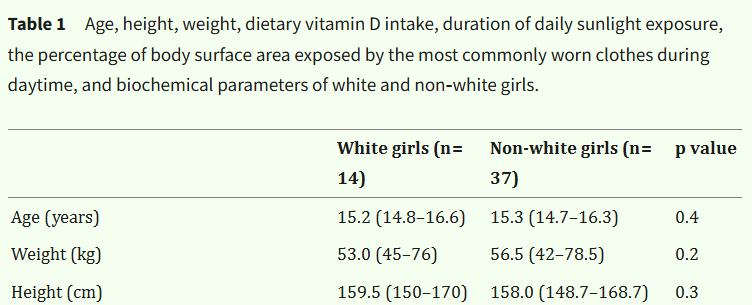

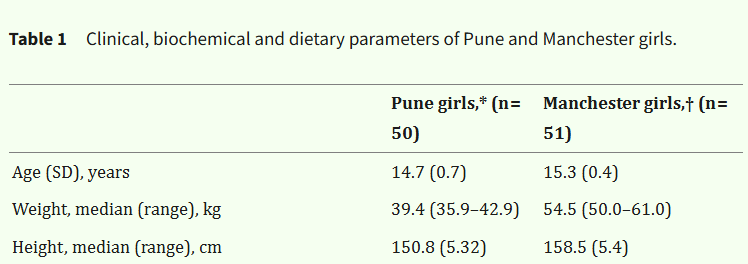

Khadilkar et al. (2024) conducted an RCT supplementing diabetic and underprivileged children with vitamin-D. In the introduction, the paper states:

“Methods: 5 to 23 year old (n = 203) underprivileged children and youth with T1DM were allocated to one of three groups”

Then, later, you see:

“A total of 203 participants were enrolled in the study, including 99 boys (48.8 %) and 104 girls (52.2 %).”

48.8% plus 52.2% is 101%. As the cell sizes are provided, we can immediately ascertain that the figure for girls should be 51.2% (104/203).
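A check like this is easy to script: recompute each percentage from the reported cell sizes and compare it with the printed value. The sketch below uses only the counts quoted above; the variable names are illustrative.

```r
# Cell sizes as reported in the paper
n_boys  <- 99
n_girls <- 104
n_total <- 203

# The cells should sum to the stated total
cells_match_total <- (n_boys + n_girls) == n_total

# Recompute each percentage to one decimal place, as the paper reports them
pct <- round(100 * c(boys = n_boys, girls = n_girls) / n_total, 1)

cells_match_total
pct
sum(pct)
```

The recomputed percentages sum to 100, which confirms that the printed 52.2% is the value at fault rather than one of the cell sizes.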

This is most likely a simple typo, but it is also a flag. (The paper has 14 authors. Did no-one notice?)

Flags like this are commonly noticed during general reading or during initial analysis (that is, after other flags have made you more interested in a paper). Intuition for this elementary arithmetic develops quickly.

Consistency

Just as easy as the above (but sometimes more of a problem for the authors!) are numerical consistency errors. For instance, Alalfy et al. (2019) investigated the effect of different perioperative wound closure techniques, and reported:

“The age of patients included in this research was between 25 and 35 years, with BMI > 30 (so, we do not have a group of women with BMI below 30 as our focus in the present research was to assess the obese women)... The following patients were excluded women with BMI < 30”

However, the same sample is later described in Table 2:

BMI: range 23.0–45.0, mean=34.5 ± 3.6, median=34.0

These cannot both be true simultaneously. This represents an elementary mistake, but quite a bad one. The three options are, as they often are:

- The first detail is wrong

- The second detail is wrong

- Both are wrong

In this case, it is likely to be both: the rest of the paper is saturated with a variety of other errors. If you would like to read a full analysis of that paper, it can be found here.

Sample and cell size mysteries

During analysis, it is good practice to highlight or separately write down figures like the above, so they can be compared later.

While this is simple in theory, it can involve a lot of individual details. For example, here are the analysis notes I took for Ballot et al. (1989) “Fortification of curry powder with NaFe(111)EDTA in an iron-deficient population: report of a controlled iron-fortification”.

(This was from the Initial Analysis phase, after the publication was flagged because the paper never reported the initial cell sizes. My later notes are in brackets with JH appended.)

264 families comprising 984 individuals

Children aged < 10 y were excluded

Forty-five individuals with a hemoglobin (Hb) of 90 g/L were … excluded from the study.

(JH: ie. n=939)

Power calculation: “This gives a sample size of 142 in each group and sex with a total sample size of 568.”

(JH: groups are fortified/not fortified and men/women. Study oversampled to meet power threshold.)

The 264 families were divided by use of computer-generated random numbers into

fortified (135 families) and

control (129 families) groups

After 2 y of fortification, 672 subjects remained in the study.

A total of 267 subjects dropped out of the study:

129 moved away from the area,

115 refused to participate further,

and 23 died.

(JH: 672+267 = 939, OK)

(JH: 129+115+23 = 267, OK)

No significant differences in the number or category of dropouts between the fortified and unfortified.

Table 2 n's: 161+164+139+134 = 598 (JH: ?)

Table 3 n's: 127+139+124+115 = 505 (JH: ?)

Table 4 (female fortified, above n=139):

Adding up the various subgroups

70+29+15+32 = 146 (+7)

126+29 = 155 (+16)

51+49+53 = 153 (+14)

53+65+34 = 152 (+13)

What's going on??

As per the final plaintive line above: there are plenty of incongruities here.

Totals and subtotals, which give way to group/cell totals and subtotals, are often poorly reported because authors often do not think including how much data was lost, excluded, or conveniently forgotten at every level is something anyone else will care about. Analysts do, of course, and the inability to correctly report cell and sample sizes may change our opinion of the author/s.
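The bookkeeping in notes like these can be scripted so that every reported total is compared against the sum of its parts. The following is a minimal sketch using only the figures quoted in the notes above. The helper name `check_sum` is hypothetical, and comparing the Table 2 and Table 3 totals against the 672 remaining subjects is an assumption made for illustration, since the paper does not say what those tables should sum to.

```r
# Compare the sum of reported parts against a reported (or expected) total
check_sum <- function(label, parts, total) {
  data.frame(label        = label,
             sum_of_parts = sum(parts),
             expected     = total,
             difference   = sum(parts) - total)
}

checks <- rbind(
  check_sum("remaining + dropouts vs. enrolled", c(672, 267), 939),
  check_sum("dropout categories vs. dropouts",   c(129, 115, 23), 267),
  # Assumption: table cell sizes are compared with the 672 who remained
  check_sum("Table 2 cells vs. remaining",       c(161, 164, 139, 134), 672),
  check_sum("Table 3 cells vs. remaining",       c(127, 139, 124, 115), 672),
  # Table 4 subgroup breakdowns for the fortified females (n = 139)
  check_sum("Table 4 breakdown 1 vs. n=139",     c(70, 29, 15, 32), 139),
  check_sum("Table 4 breakdown 2 vs. n=139",     c(126, 29), 139),
  check_sum("Table 4 breakdown 3 vs. n=139",     c(51, 49, 53), 139),
  check_sum("Table 4 breakdown 4 vs. n=139",     c(53, 65, 34), 139)
)

checks
```

Any non-zero entry in the `difference` column marks a total that needs an explanation.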

There are two primary frustrations with errors of addition such as these: (1) when you stack a few of them together, poorly reporting a portion of a portion of a sample and not recording data exclusions at any point, it is often impossible to reconstruct the relevant cell sizes, and (2) it’s typical that we don’t get to know why this data reporting is so haphazard, because authors rarely return requests for data or clarification. In a paper such as this, published in 1989, we’ll never see that data for another more simple reason — it is lost. All we get to know is: there are many un-reported exclusions, and a lot of data missing in a way that is haphazard and unpredictable.

A series of details like this should be classified as concerning rather than fatal to the hypotheses of interest. Older papers were often published when there were different data reporting standards! But having found these features in an initial analysis, we’d typically analyze the paper further — and that’s exactly what we’ll do here, further down in ‘GRIM: Reconstructing means’.

Confusing SD and SE

This specific error in data reporting is so common it gets its own category. There is a long and unfortunate history of researchers confusing these two related metrics.

The standard error of the sample mean depends on both the standard deviation and the sample size, by the simple relation SE = SD/√(sample size). The standard error falls as the sample size increases, as the extent of chance variation is reduced—this idea underlies the sample size calculation for a controlled trial, for example. By contrast the standard deviation will not tend to change as we increase the size of our sample. Altman and Bland (2005)

This simple statistical note outlines everything you need to know about the error, and the below illustrates how (alarmingly!) common the error can be.

Thirty-five (40%) of the 88 studies that reported means along with a measure of variability reported the standard error of the mean instead of the standard deviation. The standard error describes the precision with which the sample mean estimates the true population mean but does not provide direct information about the variability in the sample. Because the interpretation of the standard error is different from that of the standard deviation, it is critical to indicate which summary is reported. Olsen (2002)

If you are re-creating statistical tests (see the below sections), and it feels like nothing works, check to see if the authors have confused SD and SE. Bear in mind the statistics could be correct but the reporting of the values incorrect, or vice versa, or some combination.

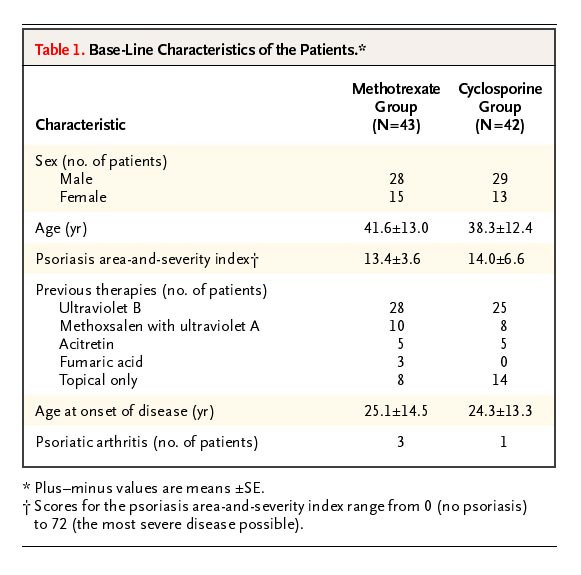

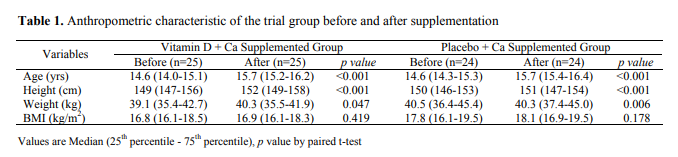

Here is a straightforward example:

This paper compares two treatment regimes for psoriasis. How should we make the determination that SE is written but the authors actually meant SD? One way is to reconstruct the data (see SPRITE, below) and sanity-check it. You can also calculate a statistical test (a between subjects t-test would be fine, for instance) and determine if the output is plausible.

But, more straightforward than either of those: simply convert the values (SD to SEM, or vice versa) and see if the conversion is viable.

## the sample sizes

n1 <- 43

n2 <- 42

## the supposed SEs

listed_SE1 <- 13

listed_SE2 <- 12.4

## calculate the implied SDs

SD1 <- sqrt(n1)*listed_SE1

SD2 <- sqrt(n2)*listed_SE2

SD1

SD2

## OUTPUT

> SD1

[1] 85.2467

> SD2

[1] 80.36118

No sample of ages in human history has ever had an SD of 80 or 85! Ages cannot vary that much, even with an incredibly strange sample of newborns and centenarians.

This method requires you to use reasoning, but very little. It might become more challenging if the published result is obscure: reasoning around ‘the potential ages of participants in a study’ is easy, whereas ‘the expected lifespan of genetically diabetic-obese (db/db) mice with a leptin receptor gene mutation’ requires a bit more knowledge.

But even if some local knowledge is required, you can usually just re-calculate the potential alternative value and determine something is amiss. The same applies to confusing SE for SD (i.e. the reverse of the above).
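Both directions of the conversion can be wrapped in two small helpers. This is a sketch only: the function names are hypothetical, and the second case uses invented numbers purely to illustrate the reverse situation.

```r
# SE = SD / sqrt(n), so the two conversions are:
sd_from_se <- function(se, n) se * sqrt(n)
se_from_sd <- function(sd, n) sd / sqrt(n)

# Case 1 (the example above): values labelled SE that look too large.
# If they really were SEs, the implied SDs of age would be absurd.
implied_sd <- sd_from_se(c(13, 12.4), c(43, 42))

# Case 2 (hypothetical numbers): a value labelled SD that looks too small.
# Suppose ages are reported as mean 45.0 (SD 1.9) with n = 50. If 1.9 is
# actually an SE, the implied SD is much more typical of adult age data.
implied_sd_reverse <- sd_from_se(1.9, 50)

round(implied_sd, 2)
round(implied_sd_reverse, 2)

# Round trip: converting the implied SD back recovers the listed value
se_from_sd(implied_sd_reverse, 50)
```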

While this is a simple error — simple to make, simple to find, simple to fix — whole papers have previously been shown to be completely meaningless due to this oversight. Happily for the authors, (a) this is usually an honest mistake of statistical ignorance, not anything that implies a problem with research integrity, and (b) this means the paper can be corrected or re-published with the right values in place.

From the perspective of the analyst, this obviously means the relevant results in the paper are not trustworthy. However, there is the strong likelihood that you can calculate the correct results without consulting the authors.

The ‘quick’ SD check

Of all the heuristics an analyst develops when looking for flags in a hurry, one of the most useful (and the most certain) concerns standard deviations presented in text.

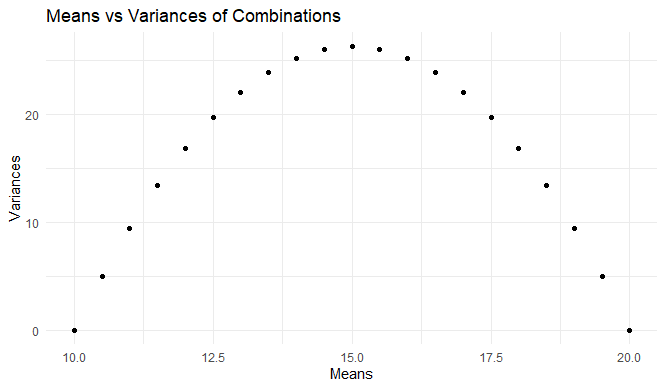

Let’s say we only know the min (10) and max (20) of a sample (n=20). What’s the maximum variance that sample can have?

The answer is: the points of maximum variance for any given mean are defined by all the samples made up out of [10,10,10,…20,20,20]. Consequently, the means vary from just above 10 (all 10s except one 20) to just below 20 (all 20s except one 10). The maximum variance is found at a sample of 10 10s and 10 20s. This has a mean of 15, and a sample variance of 26.316, and (not shown below, but very important) a sample standard deviation of 5.130. That’s just over half the range.

library(ggplot2)

n <- 20

combinations <- expand.grid(rep(list(c(10, 20)), n))

means <- rowMeans(combinations)

variances <- apply(combinations, 1, var)

data <- data.frame(means, variances)

ggplot(data, aes(x = means, y = variances)) +

geom_point() +

labs(title = "Means vs Variances of Combinations", x = "Means", y = "Variances") +

theme_minimal()

(For those of you interested in taking this further, the relationship outlined here has been formally defined. A familiarity with the underlying mathematics is necessary to develop and extend these techniques.)

What it amounts to in this context is:

- the standard deviation of any sample has a maximum value of just over half the range

- just over half becomes closer and closer to exactly half when n increases
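Because the extreme samples contain only the minimum and maximum values, the same curve can be traced without enumerating every combination: it is enough to count how many values, k, sit at the maximum. The sketch below assumes this two-point construction. `two_point_var` and `max_sd` are hypothetical helper names, not functions from any package.

```r
# Sample variance of a sample with k values at `hi` and (n - k) values at `lo`
two_point_var <- function(k, n, lo, hi) {
  k * (n - k) / (n * (n - 1)) * (hi - lo)^2
}

# Largest possible sample SD for n values bounded by lo and hi:
# split the sample as evenly as possible between the two extremes
max_sd <- function(n, lo, hi) {
  k <- floor(n / 2)
  sqrt(two_point_var(k, n, lo, hi))
}

n <- 20
k <- 1:(n - 1)  # at least one 10 and one 20, so both extremes are present
curve <- data.frame(means     = (k * 20 + (n - k) * 10) / n,
                    variances = two_point_var(k, n, 10, 20))

max(curve$variances)   # maximum variance, reached at k = 10
max_sd(20, 10, 20)     # just over half the range
max_sd(2000, 10, 20)   # approaches exactly half the range as n grows
```

With `max_sd` in hand, a reported SD can be compared directly against the ceiling implied by the reported minimum, maximum, and n.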

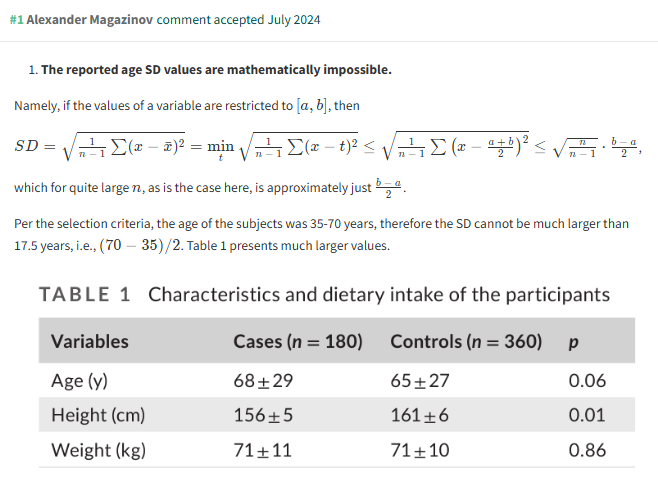

Keep this in mind, and you can spot unusually high SDs at a glance. Here’s an example of Alexander Magazinov using this rule on PubPeer to spot some unusually high SDs in age data. (You can see the derivation in brief at the top of his post, if you’re interested.)

This is a very useful observation, because it’s fast and easy to evaluate at a glance. There are very few forensic metascientific techniques which are this efficient!

For a formal written analysis of data like this, we generally use SPRITE — which we’ll meet later — as it will return other relevant information, like the maximum possible values and the distribution needed to obtain those values.

Recalculation

Many reported statistical tests can be recalculated from the test statistics they present. Usually, this is quite straightforward, and this simplicity means that recalculation is usually a forensic meta-analyst’s first port of call for investigating any given paper.

Independent-sample t-tests

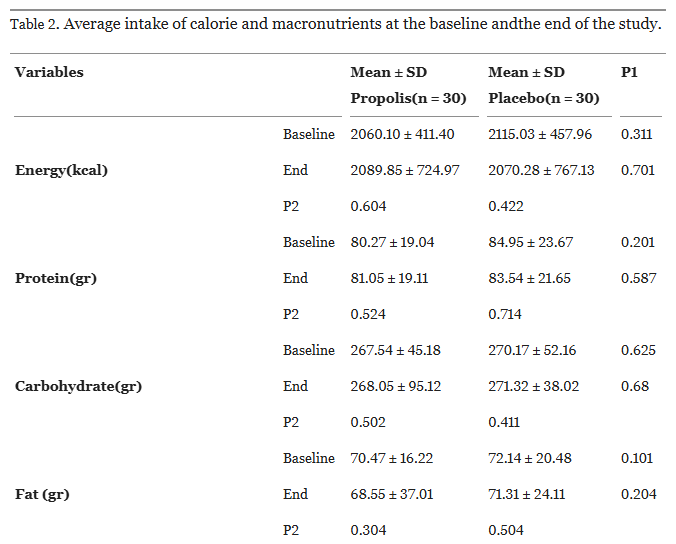

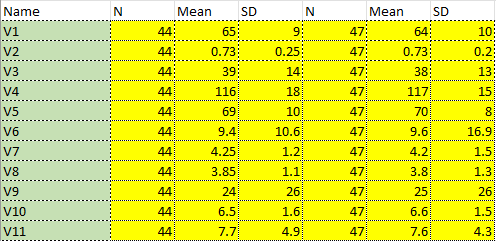

Take this table from Afsharpour et al. (2019), for instance:

Each row is an independent-samples t-test; each column is a within-samples t-test.

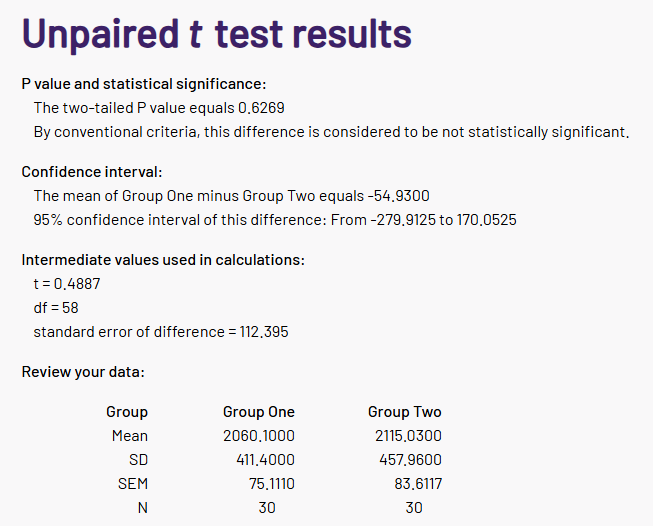

We’ll get to the within-sample t-test in a later section, but for now, let’s recalculate the simpler independent samples test. If we take just the first line of this table (ending p=0.311), and open a quick online calculator, we can reconstruct the test…

… or not.

The paper’s p-value is not 0.6269, it is 0.311. Something is clearly amiss.

This simple recalculation does not require that you already know the t-statistic (although it will usually be reported, and is handy to sanity check proceedings!), because the t-value is simply the difference in the means, divided by the standard error of the difference, and these are calculated from the group descriptive statistics and the cell sizes. However, if the t statistic is reported, you can also check that too (and a similar calculator will do, in a pinch).

To calculate it in R is pleasingly straightforward:

# get library

library(stats)

# define groups

mean1 <- 2060.1

sd1 <- 411.4

n1 <- 30

mean2 <- 2115.03

sd2 <- 457.96

n2 <- 30

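# calculate the t-statistic (with equal cell sizes, as here, this unpooled
# standard error is identical to the pooled one used by Student's t-test)...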

t_stat <- (mean1 - mean2) / sqrt((sd1^2 / n1) + (sd2^2 / n2))

# and the df...

df <- n1 + n2 - 2

# and thus the p-value (2 sided)

p_value <- 2 * pt(-abs(t_stat), df)

# Output the p-value

p_value

This returns the same p-value as our online calculator, just with more decimal places (and the difference between p=0.6268801 and p=0.6269 will become important later!). It also confirms our suspicion that something is amiss with the calculations in this paper.

NOTE: when back-calculating t-tests in greater detail, always remember that a paper may use either Student’s t-test or Welch’s t-test (here’s a discussion about the difference), and then fail to mention which was used. Also, there will often be no discussion about the assumptions of variance — so no clues, either. When in doubt, remember to test both scenarios.
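Testing both scenarios from summary statistics takes only a few lines. The function below is a sketch rather than a packaged routine (`t_from_summary` is a hypothetical name): it applies the standard pooled-variance formula for Student's test and the Welch-Satterthwaite degrees of freedom for Welch's test, here using the values from the table row above.

```r
# Student's and Welch's t-tests from summary statistics alone
t_from_summary <- function(m1, sd1, n1, m2, sd2, n2) {
  mean_diff <- m1 - m2

  # Student's t-test: pooled variance, df = n1 + n2 - 2
  pooled_var <- ((n1 - 1) * sd1^2 + (n2 - 1) * sd2^2) / (n1 + n2 - 2)
  t_student  <- mean_diff / sqrt(pooled_var * (1 / n1 + 1 / n2))
  df_student <- n1 + n2 - 2

  # Welch's t-test: unpooled variances, Welch-Satterthwaite df
  v1 <- sd1^2 / n1
  v2 <- sd2^2 / n2
  t_welch  <- mean_diff / sqrt(v1 + v2)
  df_welch <- (v1 + v2)^2 / (v1^2 / (n1 - 1) + v2^2 / (n2 - 1))

  t_vals  <- c(t_student, t_welch)
  df_vals <- c(df_student, df_welch)
  data.frame(test = c("Student", "Welch"),
             t    = t_vals,
             df   = df_vals,
             p    = 2 * pt(-abs(t_vals), df_vals))  # two-sided p-values
}

res <- t_from_summary(2060.1, 411.4, 30, 2115.03, 457.96, 30)
res
```

With equal cell sizes the two t-statistics coincide and only the degrees of freedom differ, so the two p-values are nearly identical here. With unequal cell sizes and unequal variances they can diverge noticeably.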

Within-subjects t-tests

Above, we saw how easy it was to recalculate an independent samples t-test. Although underappreciated, it is also possible to check elements that are hidden in a within-sample t-test, and it is only slightly more complicated than the between-subjects version.

Happily for us, meta-analysts (the regular kind, not the forensic kind) are very interested in rmSMDs (repeated measures standardized mean differences) for assessing multiple measurements across different papers. In deriving those, they have established some simple statistical procedures we can borrow for forensic analysis. Jané and colleagues (2024) have an excellent exposition of how this is possible in a range of scenarios, which is worth reading in full if you are dealing with a lot of within-subjects data. You can see the elements described below directly reflected in their preprint.

Let’s start with a fully worked example. Say we have n=10 within-subjects observations, which represent how well some study participants are recovering from an injury. In this case, let’s say we are measuring some blood metabolite pre- and post-physical therapy, such that:

| Time 1 | Time 2 |
|---|---|
| 10 | 6 |
| 11 | 15 |
| 12 | 14 |
| 13 | 11 |
| 14 | 9 |
| 15 | 7 |
| 16 | 11 |
| 17 | 18 |
| 18 | 20 |
| 19 | 22 |

From this, we can calculate:

- the column means and SDs

- the correlation over time (r=0.662),

- the change scores and their mean, then

- the (change scores - mean change), then finally

- the squared deviation

Table 2:Laying out the within-subjects t-test calculations

| Time 1 | Time 2 | Change Scores | (Change - Mean Change) | Squared deviation |
|---|---|---|---|---|
| 10 | 6 | -4 | -2.8 | 7.84 |
| 11 | 15 | 4 | 5.2 | 27.04 |
| 12 | 14 | 2 | 3.2 | 10.24 |
| 13 | 11 | -2 | -0.8 | 0.64 |
| 14 | 9 | -5 | -3.8 | 14.44 |
| 15 | 7 | -8 | -6.8 | 46.24 |
| 16 | 11 | -5 | -3.8 | 14.44 |
| 17 | 18 | 1 | 2.2 | 4.84 |
| 18 | 20 | 2 | 3.2 | 10.24 |
| 19 | 22 | 3 | 4.2 | 17.64 |
| M=14.5, SD=3.03 | M=13.3, SD=5.46 | Mean = -1.2 | | Sum of squared deviations = 153.6 |

So the sample standard deviation of the change scores is the square root of the summed squared deviations divided by the degrees of freedom (sqrt(153.6/9) = 4.13…) and, finally, we can calculate the denominator of the t-test, 4.13…/sqrt(10), which is 1.31.

Hence, the t-statistic is -1.2/1.31, which is t=-0.92 (the sign only reflects the direction of the change), p=0.382.
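The hand calculation above can be cross-checked against R's built-in paired test. This is a minimal sketch using the ten pairs of values from the table; the variable names are only illustrative.

```r
# Raw data from the worked example
time1 <- 10:19
time2 <- c(6, 15, 14, 11, 9, 7, 11, 18, 20, 22)

# Manual route: mean change divided by (SD of change / sqrt(n))
change   <- time2 - time1
t_manual <- mean(change) / (sd(change) / sqrt(length(change)))

# Built-in route
check <- t.test(time2, time1, paired = TRUE)

c(manual  = t_manual,
  builtin = unname(check$statistic),
  p       = check$p.value,
  r       = cor(time1, time2))
```

Both routes should give the same t-statistic, and the correlation should match the r=0.662 listed earlier.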

But: let’s say we have none of this, and all we have is a far more typical and rather terse description of an experimental outcome:

After 8 weeks of physical therapy, n=10 participants showed no significant difference in their levels of blood metabolites (mean_pre=14.5 (3.03) vs. mean_post=13.3 (5.46), p=0.382).

That doesn’t seem like much, but we can use it to recreate most of the above.

- The mean of all the differences is the same as the difference in all the means, so mean_diff=-1.2

library(stats)

mean_pre <- 14.5

sd_pre <- 3.03

mean_post <- 13.3

sd_post <- 5.46

n <- 10

p <- 0.382

mean_diff= mean_post-mean_pre

# OUTPUT

> mean_diff

[1] -1.2

- The p-value allows us to retrieve the t-statistic

# get paired t from p value

paired_t <- qt(p/2, n-1, lower.tail = FALSE)

# OUTPUT

> paired_t

[1] 0.919132

- We can retrieve the correlation between the datasets using the newly-calculated t-statistic…

r <- (paired_t^2*(sd_pre^2 + sd_post^2)-n*(mean_post-mean_pre)^2) /

(2*paired_t^2*sd_pre*sd_post)

#OUTPUT

> r

[1] 0.6633034

- Then, we can use the reported SDs and our newly recovered r value to calculate the sample standard deviation of the change scores provided above…

sd_change <- sqrt((sd_pre^2 + sd_post^2 - (2*sd_pre*sd_post)*r))

#OUTPUT

> sd_change

[1] 4.131181

- … or we could find the same figure (divided by sqrt(10) in this case) by just using the mean difference and the t-value to calculate the t-test denominator

# get t-test denominator from t statistic and mean difference

t_denom <- mean_diff/paired_t

#OUTPUT

> t_denom

[1] -1.30558

Any error or impossibility in these transforms is a big problem: there are no approximations above, only conversions of values which should be precise and straightforward. When you become familiar with the steps, within-subjects t-tests should be almost as fast to recalculate as between-subjects, and just as definitive.

Let’s return to Afsharpour et al. 2019 (as seen previously), where we have a series of within-subjects results. The first one listed is:

Propolis group, Time 1: 2060.10 (411.40), n=30

Propolis group, Time 2: 2089.85 (724.97), n=30 (i.e. no dropouts)

This gives us a p-value of 0.604. Is it correct? Let’s find out.

Again, a within-sample t-test is calculated by taking the mean of the differences (i.e. Time 2 - Time 1 for every participant), and dividing that by the sample standard deviation of the differences, adjusted for the sample size.

We have neither of those figures to begin with, so both need to be retrieved using the code above.

In this case, we can proceed straight to trying to retrieve the correlation between the Time 2 and Time 1 values.

# Retrieving the correlation from the p-value and descriptive statistics in a WS t-test

# Adapted from Jané et al. (2024)

mean_pre <- 2060.10

sd_pre <- 411.40

mean_post <- 2089.85

sd_post <- 724.97

pval <- 0.604

n <- 30

# get paired t from p value

paired_t <- qt(pval/2, n-1, lower.tail = FALSE)

r <- (paired_t^2*(sd_pre^2 + sd_post^2)-n*(mean_post-mean_pre)^2) /

(2*paired_t^2*sd_pre*sd_post)

#OUTPUT

> r

[1] 1.002958

Unfortunately for the authors, this returns r=1.002958, which is an impossible value for a correlation coefficient, and a common kind of anomaly to find when checking within-subjects t-tests. Given the between-subjects p-value using the same data is also wrong, you might have a negative opinion of the accuracy present in the rest of the paper (and in this case, you would be correct).

Within-subjects t-tests take a little work to understand, but it is well worth it.
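Because the same steps recur for every within-subjects result, it can be convenient to wrap them in a small function. The sketch below is one possible way to do that; the function name `recover_paired_r` and its return format are hypothetical, but the formula is the one used in the code above.

```r
# Hypothetical helper: recover t and r from reported descriptives and p-value,
# and flag correlations that fall outside [-1, 1].
recover_paired_r <- function(mean_pre, sd_pre, mean_post, sd_post, n, p) {
  paired_t <- qt(p / 2, n - 1, lower.tail = FALSE)
  r <- (paired_t^2 * (sd_pre^2 + sd_post^2) - n * (mean_post - mean_pre)^2) /
    (2 * paired_t^2 * sd_pre * sd_post)
  list(t = paired_t, r = r, possible = abs(r) <= 1)
}

# The worked example, then the Propolis result
worked   <- recover_paired_r(14.5, 3.03, 13.3, 5.46, n = 10, p = 0.382)
propolis <- recover_paired_r(2060.10, 411.40, 2089.85, 724.97, n = 30, p = 0.604)

worked$possible
propolis$possible
```

Since reported p-values are themselves rounded, it is prudent to re-run the function at both ends of the p-value's rounding interval (here, 0.6035 and 0.6045) before treating an r just outside the valid range as definitive.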

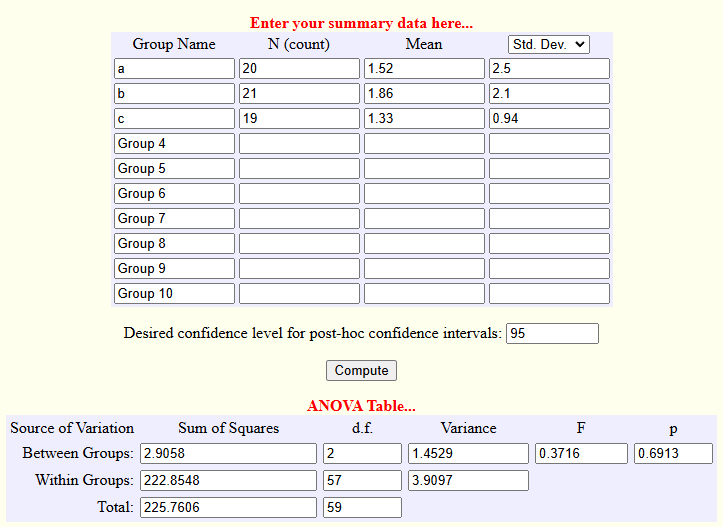

One-way ANOVA¶

One-way ANOVAs are commonly deployed in research that compares more than two groups at baseline, although they have utility elsewhere. There is an online webpage, as there often is, for testing this straight from your browser. Here’s a sample of what that might look like.

It’s a little unwieldy, so let’s reproduce this in R.

(Note: there is almost certainly a package or function somewhere which does this for you, but I have never located it, as the code below works.)

library(stats)

# Enter the data

means <- c(1.52,1.86,1.33)

SDs <- c(2.5,2.1,0.94)

n <- c(20,21,19)

# Get the # of means and overall mean

k <- length(means)

o.mean <- sum(n*means)/sum(n)

# Calculate the degrees of freedom for between and within

dfb <- k - 1

dfw <- sum(n) - k

# Calculate the mean squares for between and within

MSb <- sum(n * (means - o.mean)^2)/(k-1)

MSw <- sum((n-1)*SDs^2)/dfw

# Calculate the F value

F_value <- MSb/MSw

# And convert that to a p-value

p_value <- pf(F_value, dfb, dfw, lower.tail = FALSE)

# OUTPUT

> F_value

[1] 0.3716134

> p_value

[1] 0.6912792

Either method should tell you if your one-way ANOVA of interest is correctly calculated.

You can also calculate the sum of squares, effect sizes, contrasts, etc. but you’ll rarely need to.
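On the rare occasion you do need them, the sums of squares and a simple effect size fall out of the same summary statistics. This is a minimal sketch reusing the example values above; eta squared is used here as one common choice of effect size, not necessarily the one a given paper reports.

```r
# Same summary statistics as the one-way ANOVA example
means <- c(1.52, 1.86, 1.33)
SDs   <- c(2.5, 2.1, 0.94)
n     <- c(20, 21, 19)

o.mean <- sum(n * means) / sum(n)

# Between- and within-group sums of squares
SSb <- sum(n * (means - o.mean)^2)
SSw <- sum((n - 1) * SDs^2)

# Eta squared: proportion of total variance attributable to group membership
eta_sq <- SSb / (SSb + SSw)

c(SSb = SSb, SSw = SSw, eta_sq = eta_sq)
```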

Two-way ANOVAs etc.¶

N-way ANOVAs are common, but they cannot be fully reproduced from their summary statistics. You can approximate them using SPRITE, which will be discussed briefly in the section below. However, the F value to p-value conversion can be checked.

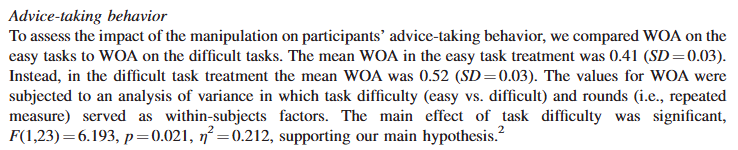

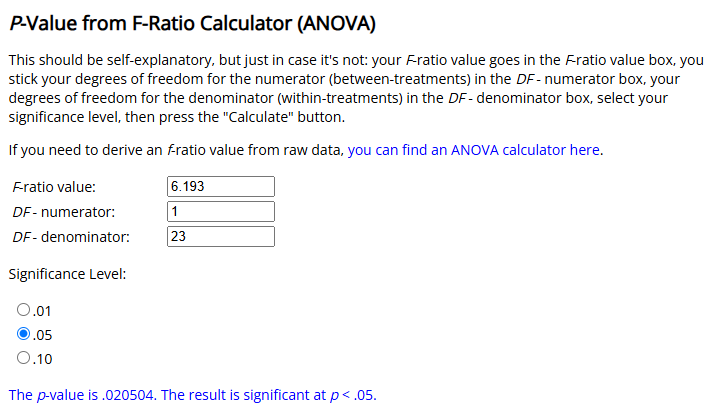

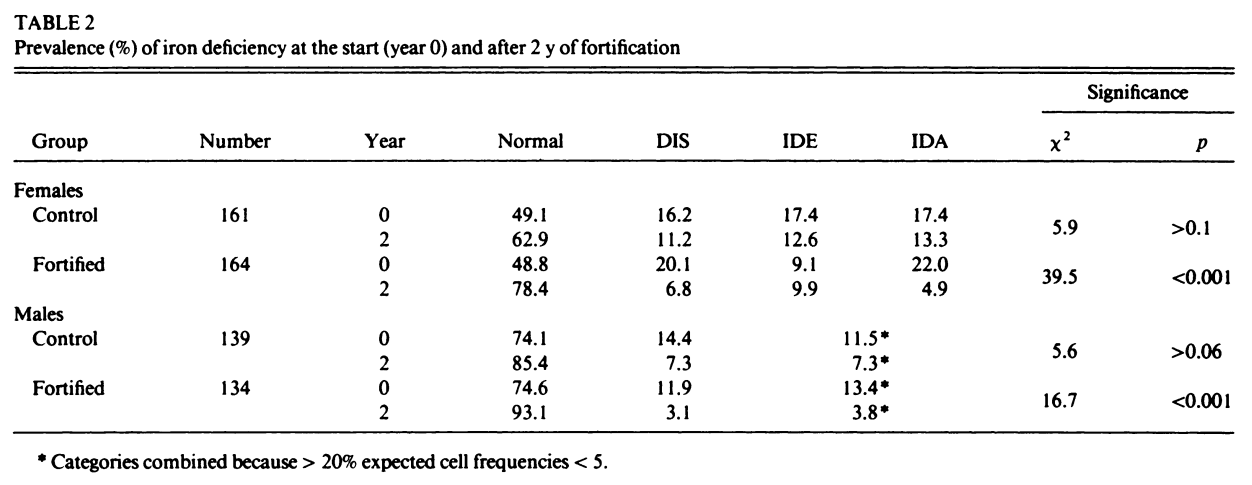

Here’s Gino and Moore (2007):

There are many online calculators that will allow you to check this calculation:

Or you can use a single line of R code, as above.

p_value <- pf(6.193, 1, 23, lower.tail = FALSE)

# OUTPUT

> p_value

[1] 0.02050397

While we’re here, an interesting point: the very next paragraph of that paper gives us a situation which means we strongly favour using R to perform these conversions where possible:

An online calculator gives us the same result (<.0001) but that does not tell us what the p value is, just what it is smaller than. R has no such problems:

p_value <- pf(28.55, 1, 60, lower.tail = FALSE)

#OUTPUT

> p_value

[1] 1.486112e-06

We will see these sorts of values again later, in the section on the STALT test.

Chi-squared¶

The chi-squared test is the non-parametric workhorse of determining a difference between nominal or categorical variables. It is easy to recalculate, there are several online calculators available, and the relevant R-code is trivial (see below).

There is only one complication: depending on the sample size and research area, what is often simply described by the shorthand ‘chi squared’ or not described at all is usually one of three tests:

- Pearson’s chi-squared test

- Pearson’s chi-squared test with Yates’ correction

- Fisher’s exact test (for a 2x2 comparison)

You can find recommendations for (or against) using each test elsewhere, but for our purposes here, be aware:

(a) researchers tend to think of categorical calculations as somewhat monolithic,

(b) papers can significantly under-report which test was used, and

(c) some software makes decisions about what test / correction to use without user input!

Let’s test a quick example where we might see this tension:

“In City X, a catchment of n=382 people were identified drinking from Water Source A, n=57 of which showed symptoms of infection within the three months of the study. Only n=9 citizens who drank bottled water became ill (n=126) over the same timeframe. The difference was significant (p=0.02).”

Obviously, this is a little slapdash, but it is not a particularly unusual amount of brevity.

Either way, we can calculate the cell sizes, and test all three possibilities.

# Put the data in a 2x2 matrix

test_mat <- matrix(c(9,117,57,325), nrow = 2, ncol = 2)

# With Yates

chisq.test(test_mat,correct=TRUE)

# Without Yates

chisq.test(test_mat,correct=FALSE)

# Fisher's Exact Test

fisher.test(test_mat)

This gives us three potential p-values.

# Pearson's Chi-squared test with Yates' continuity correction

# data: test_mat

# X-squared = 4.4067, df = 1, p-value = 0.0358

# Pearson's Chi-squared test

# data: test_mat

# X-squared = 5.0715, df = 1, p-value = 0.02432

# Fisher's Exact Test for Count Data

# data: test_mat

# p-value = 0.02247

# etc.

I have chosen this example deliberately to be indeterminate, because different recommendations can be readily found for the use of Yates’ correction:

- when any cell size is expected to be less than 10, or

- same, but less than 5, or

- never — don’t use Yates’ correction

In other words, in this example we might not get to know exactly what the researchers did. If this was found during analysis, it would be responsible to look for additional examples of a similar test being used in the same paper, or for more information about how the experiment was conducted elsewhere in the paper.
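One way to make the comparison explicit is to ask which candidate tests are compatible with the p-value as reported. The sketch below assumes the reported p=0.02 was rounded to two decimal places, so anything within half a unit of the last digit counts as a match; the variable names are illustrative.

```r
# Same 2x2 matrix as above
test_mat   <- matrix(c(9, 117, 57, 325), nrow = 2, ncol = 2)
reported_p <- 0.02

# p-values under each candidate test
candidates <- c(
  yates   = chisq.test(test_mat, correct = TRUE)$p.value,
  pearson = chisq.test(test_mat, correct = FALSE)$p.value,
  fisher  = fisher.test(test_mat)$p.value
)

# Assumption: the paper rounded to 2dp, so allow +/- 0.005
matches <- abs(candidates - reported_p) < 0.005

data.frame(p = candidates, matches_reported = matches)
```

Here the reported value rules out one candidate but cannot separate the other two, which is exactly the indeterminacy described above.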

(Note: the above does not include the more esoteric (but still useful) tests, like Barnard’s. However, they should also be recalculable, and you can expect any researcher who uses them with intent to generally do a better job of specifying exactly what statistical method they deployed.)

Checking regression statistics¶

Linear regressions are often reported a little more comprehensively than other statistics, most likely because they require additional information to be interpreted. Subject to assumptions, the B value, SE, t-value and p-value all exist within a straightforward interrelationship, as:

$$ t = \frac{B}{SE} $$

Specifically: the unstandardised regression coefficient (B) divided by the standard error (SE) equals the t-value (t), and the p-value shown should be congruent with that t-value (see above). The Free University of Berlin has an excellent explainer of the basic underlying statistics.
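As a minimal sketch of that check, the code below uses made-up values for a single regression coefficient (B, SE, residual degrees of freedom, and the reported t and p are all hypothetical) and recomputes t and p for comparison.

```r
# Hypothetical reported values for one coefficient
B          <- 0.42
SE         <- 0.15
df_resid   <- 96      # residual degrees of freedom (n minus number of estimated parameters)
t_reported <- 2.80
p_reported <- 0.006

# Recalculate
t_calc <- B / SE
p_calc <- 2 * pt(abs(t_calc), df_resid, lower.tail = FALSE)

c(t_reported = t_reported, t_calc = t_calc,
  p_reported = p_reported, p_calc = p_calc)
```

As with means and SDs, B and SE are usually rounded in print, so small discrepancies in the recalculated t are expected; large ones are not.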

Other recalculable statistics¶

Other metrics are able to be recalculated from the text where they are cited, but they are less frequently seen and trivial to re-calculate, the most popular examples being the Q-value (pFDR) and good old-fashioned Z scores.
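For completeness, the Z score conversion is a one-liner. This sketch assumes a two-tailed test; the z value is just a familiar example.

```r
# Two-tailed p-value from a Z score
z <- 1.96
p_two_tailed <- 2 * pnorm(abs(z), lower.tail = FALSE)
p_two_tailed
```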

StatCheck¶

Now, given the number of tests listed above, you can probably see how just ‘checking all the tests quickly’ can be anything but quick if a paper is long and dense enough. What happens if you have dozens or even hundreds of individual t-tests, chi-squared tests, regression analyses, etc. that need rechecking, or if you have tests liberally salted across dozens or hundreds of pages of text?

That requires a lot of typing, and you may make a mistake yourself! That’s where StatCheck comes in.

StatCheck is one of the first forensic metascientific tools available to the public, and — as far as I’m aware — was the first automated tool analysts had available. It extracts and automates all of the forms of recalculation above straight from a PDF file. Specifically, it recalculates t, F, χ2, Z and Q values relative to their presented p-values and degrees of freedom.

To run it, you simply insert a PDF. When I run it locally in RStudio, this is all the work of:

install.packages('statcheck')

library(statcheck)

checkPDF("C:/Users/james/Downloads/paper_in_question.pdf")

But you don’t even need R locally installed, because the same code will run through a Shiny app here. It’s amazingly convenient.

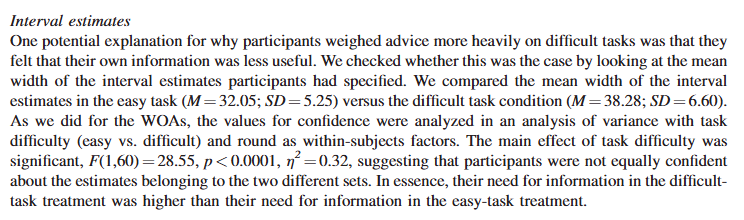

StatCheck output looks like this:

(In this example, the analyzed paper is dealing with very large population-level statistics, and the few results that are checkable are very large t statistics, computing infinitesimally small p-values. So, it all looks good.)

Unfortunately, StatCheck is also somewhat limited as a tool. It is not good with different publication formats, tables, or any esoteric presentations of even the simplest data — it works on American Psychological Association (APA) formatted papers only.

So, unless you are interested in papers within certain subsections of the social sciences, it will not be an immediate help. But when you have a correctly formatted paper, it is tremendous, and will save you a lot of time that otherwise would be spent on recalculation.
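To make the extract-and-recompute idea concrete, here is a minimal base-R sketch of it for a single APA-style t-test. This is not StatCheck's own code: the example sentence, the regular expression, and the 2dp matching rule are all assumptions made for illustration.

```r
# Hypothetical sentence in APA style
txt <- "The groups differed, t(28) = 2.20, p = .03."

# Capture df, t and p (assumes exactly this spacing and a '=' sign for p)
pattern <- "t\\(([0-9]+)\\) = (-?[0-9.]+), p = (0?\\.[0-9]+)"
parts   <- regmatches(txt, regexec(pattern, txt))[[1]]

df_rep <- as.numeric(parts[2])
t_rep  <- as.numeric(parts[3])
p_rep  <- as.numeric(parts[4])

# Recompute the two-tailed p-value and compare at the reported precision (2dp)
p_calc     <- 2 * pt(abs(t_rep), df_rep, lower.tail = FALSE)
consistent <- abs(p_calc - p_rep) < 0.005

c(df = df_rep, t = t_rep, p_reported = p_rep, p_calc = p_calc)
consistent
```

Even this toy version shows why formatting matters so much: any deviation from the expected pattern and nothing is extracted at all.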

GRIM¶

Assessing means¶

GRIM stands for ‘granularity related inconsistency of means’. GRIM is a simple observation: given that many means are fractions consisting of a whole number divided by a whole number, a lot of decimals can’t exist as means.

GRIM is a quick and easy evaluation to make, especially considering it is available as an R function within the scrutiny package. Say we have 30 people who answer the question: “How do you feel today?” from 1 (terrible) to 7 (excellent) — in whole numbers, no partial numbers or fractions — and a paper reports a mean of 3.51.

This is impossible, and thus we can determine the stated result ‘fails GRIM’.

GRIM is already available in the R package ‘scrutiny’. Once that is available via:

install.packages("scrutiny")

library(scrutiny)

Then the ‘grim’ function can be called in a single line, specifying the value of interest and the sample size:

> grim("3.51", n=30)

3.51

FALSE

Why false?

Because the answer is a fraction: X/30, where X is the sum of the answers to the ‘feeling’ question, and 30 is the number of people who were asked it. We can confirm this with primary school mathematics: it is possible for the answer to be 3.5 or 3.53, but not 3.51.

> 105/30

[1] 3.5

> 106/30

[1] 3.533333

With a little bit of inference, we can speculate on exactly what went wrong.

For instance, maybe someone left the question blank, and the denominator isn’t actually 30? Perhaps there’s a single missing value (n=29) or maybe two (n=28)?

> 101/29

[1] 3.482759

> 102/29

[1] 3.517241

> 98/28

[1] 3.5

> 99/28

[1] 3.535714

Neither of these produces 3.51 (but 102/29 would round to 3.52… if that was the solution, the authors would have failed to round correctly).

What if, against instructions, a participant put ‘3.5’ as how they feel?

> 105.5/30

[1] 3.516667

Also not possible: that rounds to 3.52.

What if some smart alec put a silly answer, like ‘3.3333’?

> 105.3/30

[1] 3.51

Well, that works perfectly. We should expect this, because if you allow the answer to the item in question to be any decimal, GRIM doesn’t work at all, as any eventual mean is possible.

The ‘silly answer’ scenario is actually an analytical possibility you may have to consider — we have encountered this before in the wild when requesting data! But also consider that a silly answer might be straightforwardly impossible if, say, the question was answered on a computerized form that made you click a radio button corresponding to the digits of 1 to 5. There is no way that could be anything except an integer.

(Details like this are one of the many reasons why analysts get annoyed by papers that do not clearly specify their methods!)
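The logic is simple enough to write out by hand, which can help when explaining a GRIM failure to someone who does not use R packages. The sketch below is a bare-bones illustration, not the scrutiny implementation: the function name `grim_by_hand` is hypothetical, and it deliberately accepts either rounding direction at an exact half.

```r
# Hypothetical helper: can `reported` arise as (whole-number total) / n
# once rounded to `digits` decimal places?
grim_by_hand <- function(reported, n, digits = 2) {
  target <- as.numeric(reported)
  # The only plausible totals are the whole numbers either side of mean * n
  sums <- c(floor(target * n), ceiling(target * n))
  half_unit <- 0.5 * 10^(-digits)
  # Small tolerance so that exact halves pass under either rounding convention
  any(abs(sums / n - target) <= half_unit + 1e-9)
}

grim_by_hand("3.51", 30)
grim_by_hand("3.50", 30)
```

The first call reproduces the failure discussed above; the second shows a neighbouring value that passes.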

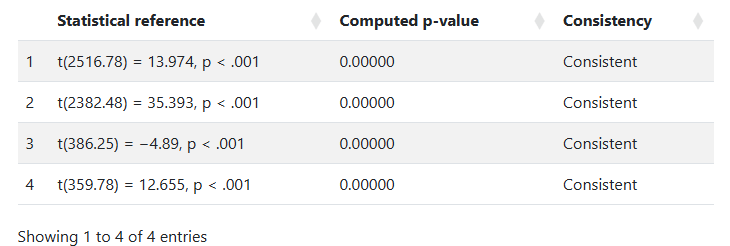

The first preprint on GRIM was published in 2017, and we have used it to find a steady stream of means which can’t be explained since then. The most interesting cases are when people outside the forensic metascientific community are using the test for their own purposes — one recent example is from Bauer and Francis (2021), an expression of concern resulting from a report by Aaron Charlton to the journal Psychological Science. This EOC reports working through exactly the same logic we saw above:

In this table, which is only a small part of the analysis, the authors analyzed a series of reported means, and found an anomaly. After doing so, they found several more (not shown). Eventually, the paper was retracted.

Reconstructing prevalence, and introducing percentages¶

We saw briefly above that the GRIM method (a) might find a mean / sample size pair that is impossible, and that (b) sometimes, we can also make a realistic attempt at discovering what the value should be. This is a useful observation, so in this section we’ll make it more systematic.

However, the concept is still simple: we simply run lots and lots of GRIM tests on a series of means, rather than manually working through testing a series of potential GRIM values, such as:

> grim(x = "5.27", n = 43)

5.27

FALSE

> grim(x = "5.27", n = 44)

5.27

TRUE

> grim(x = "5.27", n = 45)

5.27

TRUE

> grim(x = "5.27", n = 46)

5.27

FALSE

> grim(x = "5.27", n = 47)

5.27

FALSE

We test for all of these, at once, for multiple values calculated from the same sample size.

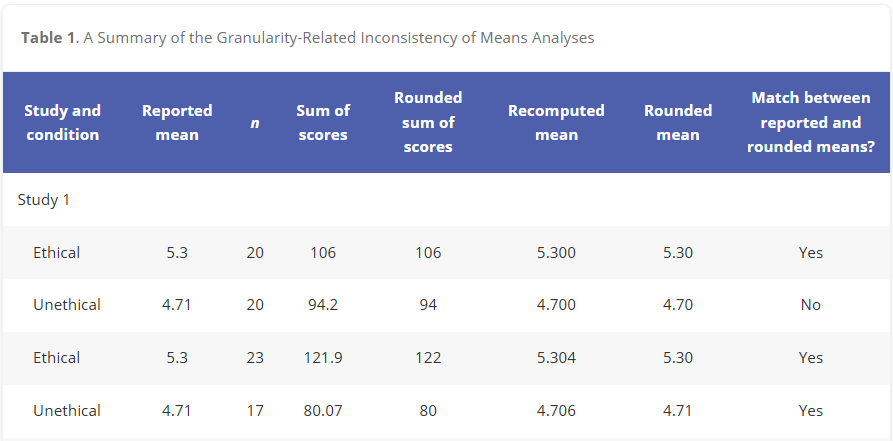

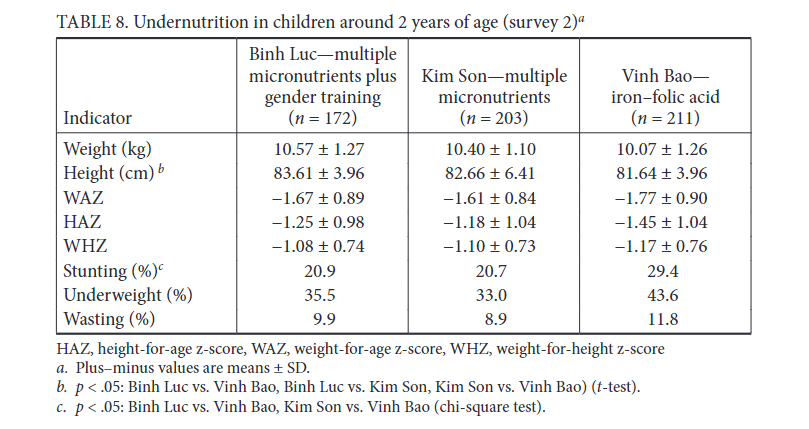

Figure 13:Table 2 from Ballot et al. 1989

We previously met Ballot et al. (1989) in the “Simple numerical errors: Summation” section, and flagged it because the paper never reported the initial cell sizes.

After that, we found an incredibly confusing reporting structure to the cell sizes in our initial analysis, where there were several stated or implied cell sizes, and all of them were different. Now, we’ll attempt to recover what those cell sizes might be.

A key observation here: percentages are also subject to the GRIM test, and they are more powerful than means. When analyzing means, GRIM is generally viable only when the number of interest is reported to at least 2 decimal places. But if a reported percentage is similarly rounded (e.g. a paper describing a mean of 5.62 and a percentage of 72.71%), then as a proportion (0.7271) it gives us 4 decimal places to play with, not two.

In GRIM terms, a mean reported as 0.85 is substantially inferior to an equivalent percentage with the same 2dp rounding (for instance, 85.39%). The same applies for 1dp rounding, where we can deploy GRIM with not 1dp but 3dp if a percentage is involved.
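The difference in power is easy to see by counting how many sample sizes remain plausible at each precision. This sketch uses a hand-rolled GRIM check (the `grim_by_hand` name is hypothetical, and this is not the scrutiny implementation) and an arbitrary search range of n = 1 to 200.

```r
# Hypothetical helper: whole-number total / n must land within half a unit
# of the last reported decimal place
grim_by_hand <- function(target, n, digits) {
  sums <- c(floor(target * n), ceiling(target * n))
  any(abs(sums / n - target) <= 0.5 * 10^(-digits) + 1e-9)
}

ns <- 1:200

# A mean of 0.85 (2dp) versus a percentage of 85.39%, i.e. 0.8539 (4dp)
ok_mean    <- sapply(ns, function(n) grim_by_hand(0.85,   n, digits = 2))
ok_percent <- sapply(ns, function(n) grim_by_hand(0.8539, n, digits = 4))

c(consistent_n_for_mean = sum(ok_mean),
  consistent_n_for_percentage = sum(ok_percent))
```

The percentage should leave far fewer candidate sample sizes standing, which is what makes it the better target.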

This is the case for Ballot et al. (1989). The first row of the table above lists four percentages, all of which need to be successfully recreated from fractions of 161 (49.1% of 161 must correspond to a whole number of participants, as must 16.2% of 161, etc.). The easiest way to do this quickly is to test every reasonable sample size by simply using GRIM as many times as necessary. These samples are very likely the same or smaller than the stated sample size: fewer than 161 datapoints may be present, but more is very unlikely.

Again, as each proportion is mathematically identical to [0,0,0,0…1,1,1,1], we can simply test what amounts to the following for each proportion, and retain only the TRUE results as possible:

> grim(x = "0.491", n = 161)

0.491

TRUE

> grim(x = "0.491", n = 160)

0.491

FALSE

> grim(x = "0.491", n = 159)

0.491

TRUE

> grim(x = "0.491", n = 158)

0.491

FALSE

> grim(x = "0.491", n = 157)

0.491

FALSE

> grim(x = "0.491", n = 156)

0.491

FALSE

And so on. Rather than doing this manually, we use sapply.

library(scrutiny)

results <- sapply(1:161, function(x) grim("0.491",x))

true_values <- which(results == TRUE)

true_values

This leaves us with all the potential sample sizes for the first proportion, which are n=:

53 55 57 106 108 110 112 114 116 159 161

And checking the other two (grim("0.162",x) and grim("0.174",x)) gives us n=:

37 68 74 80 99 105 111 117 130 136 142 148 154 160

23 46 69 86 92 109 115 121 132 138 144 149 155 161

And thus, our diagnosis: (a) n=161, the cell size for the row, is incorrect; and (b) there is data missing haphazardly within the samples, as there is no single n which can produce all three proportions. Every possible n fails the GRIM test for at least one of them.
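The "no single n" conclusion can be confirmed mechanically by intersecting the three candidate lists. The vectors below are copied from the output above; the variable names are illustrative.

```r
# Candidate sample sizes for each proportion, as listed above
n_491 <- c(53, 55, 57, 106, 108, 110, 112, 114, 116, 159, 161)
n_162 <- c(37, 68, 74, 80, 99, 105, 111, 117, 130, 136, 142, 148, 154, 160)
n_174 <- c(23, 46, 69, 86, 92, 109, 115, 121, 132, 138, 144, 149, 155, 161)

# Sample sizes compatible with all three proportions at once
common_n <- Reduce(intersect, list(n_491, n_162, n_174))
length(common_n)   # 0: no shared solution
```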

This makes it very hard work (and presumably not of any great interest!) to try to re-create the chi-squared value provided in the table, as we have absolutely no idea what any of the cell sizes are for the items. If this was a modern paper, and on a subject of interest, this is the point where you would request the data from the authors.

Regardless, the point remains: percentages of any binary yes/no answer are a great place to think about using GRIM.

Reconstructing contingency tables¶

Closely related to the above, we can also reconstruct contingency tables from row or column summaries, even if the reporting is sparse. Take the following table, which has most of the relevant information removed and is then described only in text:

| | INTERVENTION | CONTROL | Total |
|---|---|---|---|
| WELL | A | B | (A+B) |
| SICK | C | D | (C+D) |
| Total | (A+C) | (B+D) | (A+B+C+D) |

“The first dependent measure showed that 63.7% of participants in the intervention were well, compared to 44.9% in the control group (χ2(1,179) = 6.407, p=0.011).”

It was common in the social sciences, even comparatively recently, to report data in this very brief and featureless manner. This may be variously because of low standards in the field, or the ignorance of the authors, or sometimes as an active attempt to resist re-analysis.

Either way, this barrier can be defeated — we can check the χ2 calculation (see above), and GRIM can completely reconstruct this table by finding mutually plausible proportions.

To begin with, we know A/(A+C) = 63.7%, and B/(B+D) = 44.9%. The total sum of all participants is 180. And although, for context, we will test the entire range of values, we are also reasonably sure that the two groups are quite evenly balanced, as they were randomly assigned.

Thus, we can apply GRIM to the percentage figures given, using the same code given above.

Possible values for the intervention group size (A+C) are:

0.637 0.637 0.637 0.637 0.637 0.637 0.637 0.637 0.637 0.637 0.637 0.637

80 91 102 113 124 135 146 157 160 168 171 179

(Note: always check these solutions manually, because R does not round values in the traditional manner! In this case, the solution at n=80 is 0.6375 exactly, which we would typically round to 0.638.)
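The rounding behaviour is easy to demonstrate. In the sketch below, the first three lines show that base R's `round()` sends exact halves to the nearest even number rather than always upwards; the last line prints the stored value of 51/80 so that you can see what the computer is actually rounding.

```r
# Exact halves go to the nearest even integer, not always up
round(0.5)   # 0
round(1.5)   # 2
round(2.5)   # 2

# Most decimals are not stored exactly; inspect the value behind 0.6375
sprintf("%.20f", 51 / 80)
```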

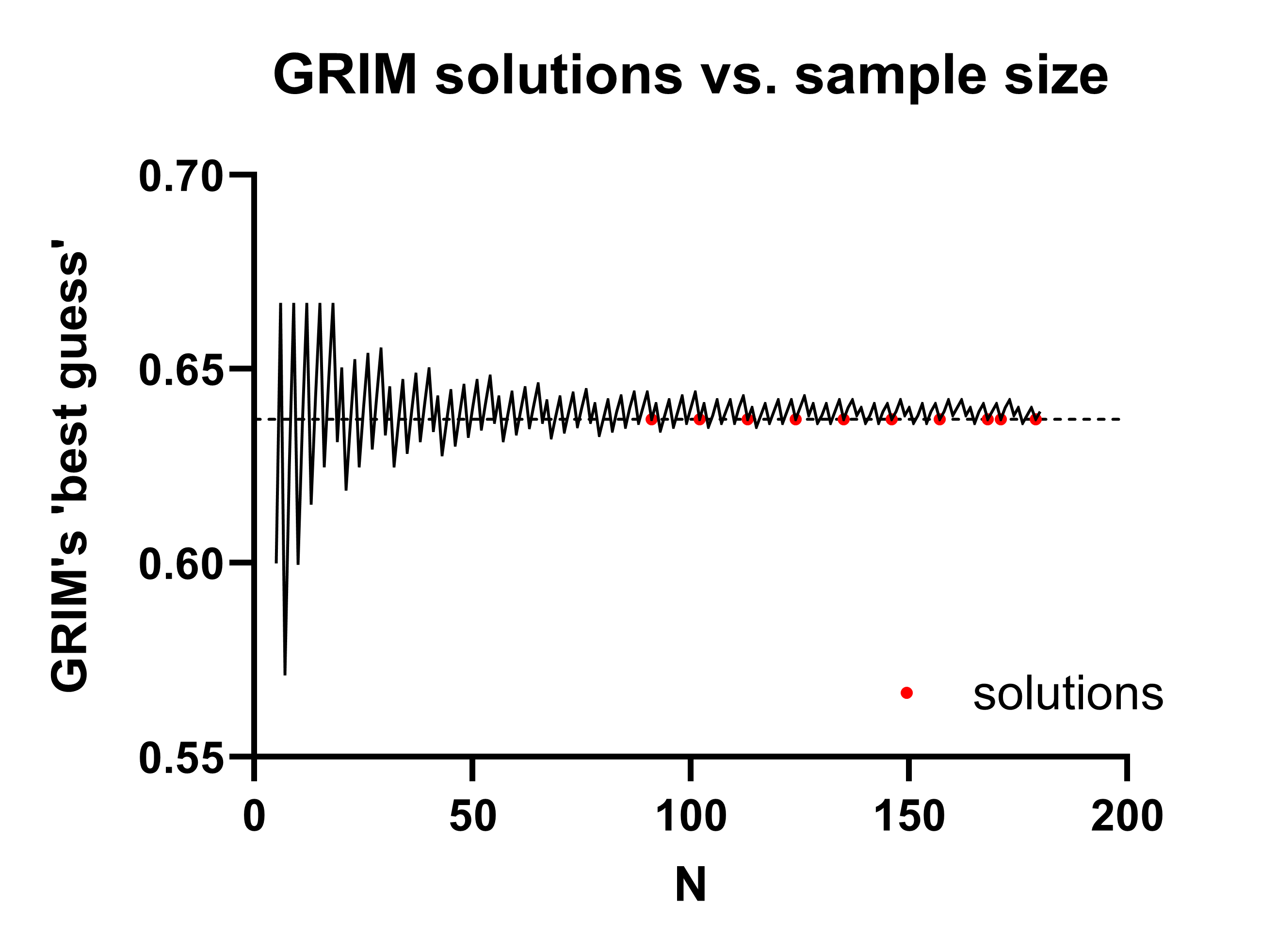

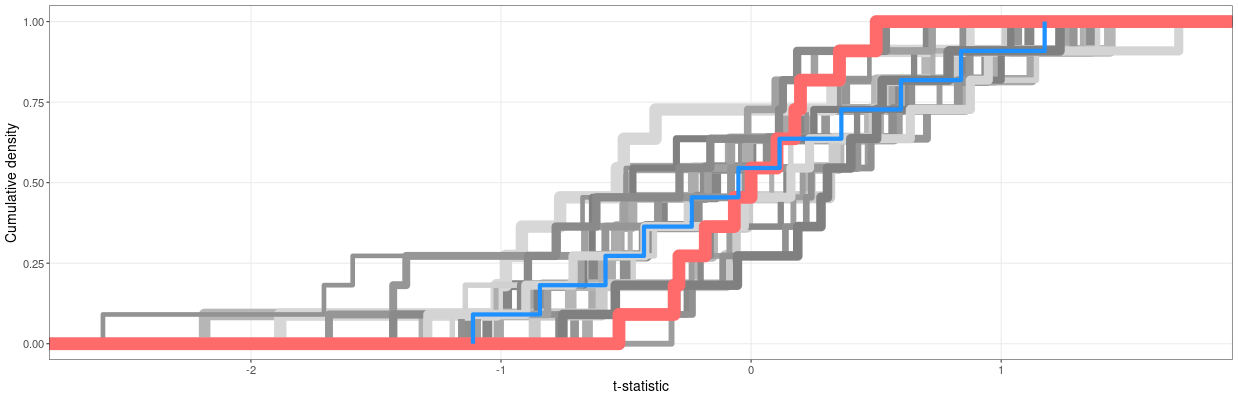

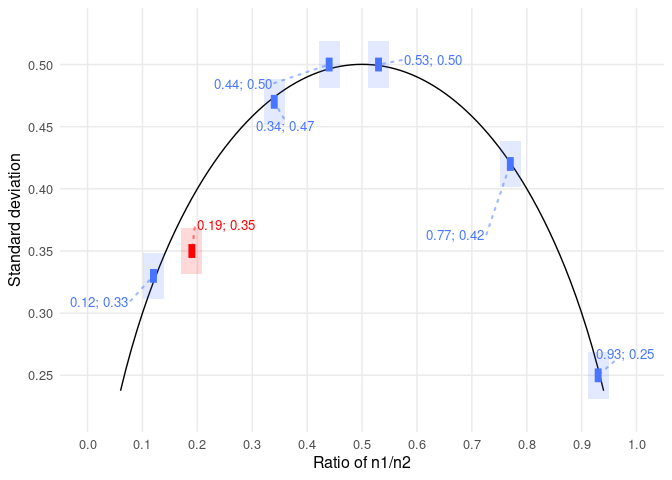

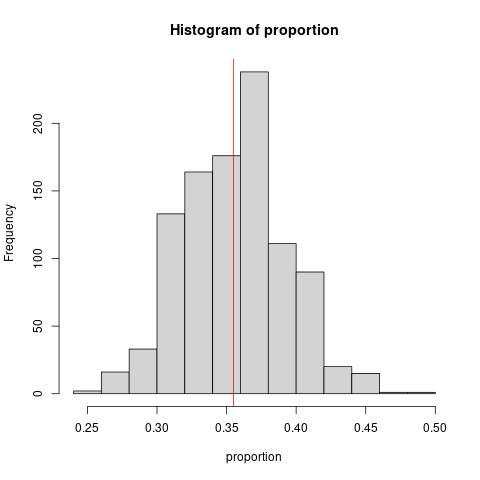

If we visualize GRIM’s ‘best guess’ for any given sample size, you can see the solutions eventually start to become more common as the N increases. In this case, the first solution is the first red dot below, at n=91.

This is the only realistic solution in this example (the next solution, A+C=102, would imply B+D=78, which would be incredibly unlikely with a regular randomization procedure). Thus, A+C = 91, thus B+D = 89, at which point we can simply fill out the rest of the table:

| | INTERVENTION | CONTROL | Total |
|---|---|---|---|
| WELL | 58 | 40 | 98 |
| SICK | 33 | 49 | 82 |
| Total | 91 | 89 | 180 |

There are two further points to keep in mind here:

(1) Other examples may require more testing than this one, but it is common that GRIM can narrow down a sample size quickly to only a few possible cell sizes. If many potential solutions are found, the procedure is only slightly more complicated: then you recreate the statistical test using the potential solutions.

(When you do so, bear in mind that you may not know what test was used! Again, see the χ2 section above: the statistic may be calculated with Fisher’s Exact Test, or a chi-squared test of independence, which in turn may or may not use Yates’ Correction. So there are often three tests to try, unless the paper specifies exactly which to use.)

For more detail on an example like this, see my 2024 preprint.

(2) The number of decimal places provided strongly affects how many solutions you can generate. In the example above, with an assumed cell size ~90, there are many more solutions for 64% than there are for 63.7%. More decimal places in the reporting and smaller cell/sample sizes are required for this test to be effective.
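The reconstruction above can be closed out by rebuilding the cells from the group sizes and percentages, then re-running the test. This is a minimal sketch that assumes the solution of 91 and 89 per group and a Pearson chi-squared test without Yates' correction; the variable names are illustrative.

```r
# Group sizes from the GRIM solution
n_int <- 91
n_ctl <- 89

# Whole-number counts implied by the reported percentages
well_int <- round(0.637 * n_int)
well_ctl <- round(0.449 * n_ctl)

rebuilt <- matrix(
  c(well_int, n_int - well_int, well_ctl, n_ctl - well_ctl),
  nrow = 2,
  dimnames = list(c("WELL", "SICK"), c("INTERVENTION", "CONTROL"))
)

rebuilt
result <- chisq.test(rebuilt, correct = FALSE)
result
```

If the recalculated statistic matches the reported χ2 and p-value, the reconstructed table is very likely the one the authors analyzed.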

RIVETS, and assessing ‘hand curated’ statistical tests¶

RIVETS stands for Rounded Input Variables, Exact Test Statistics. It’s something of a niche test, but it’s a very interesting observation. When it proves useful, it can be quite definitive.

RIVETS was originally conceived by Nick Brown to check for hand-calculated statistics, which it does in a very clever way — it looks at the likelihood that a test statistic is exactly equal to its calculation from truncated values.

That sentence is a little dense, so let’s use an example.

At baseline, the drug group (M=1.71, SD=0.22, n=25) was slightly taller than the placebo group (M=1.63, SD=0.40, n=25) but the difference did not reach significance (between-subjects t-test, t=0.876, p=0.385).

There is nothing incorrect about this test. It is correctly calculated. But it has an unusual feature.

In continuous data, decimal tails for any given value are usually quite long. When the mean and SD are truncated to 2dp, as they often are to be reported in a scientific paper, that information is redacted. In other words, papers typically present an approximation of statistical tests. In the above, we are comparing two groups which are actually:

Drug (M=1.705 to 1.714999…, SD=0.215 to 0.224999…, n=25) vs.

Placebo (M=1.625 to 1.634999…, SD=0.395 to 0.404999…, n=25)

If we encounter these descriptives in the text, we do not have an exact value for t and p, but rather ranges. And while the absolute differences between the potential hidden values may be small, they are almost always big enough to affect the precise value of the test statistic and the p-value! For instance, if we take the largest possible difference between the means (so, the highest version of the higher mean, and the lowest version of the lower mean), and the smallest possible standard deviations, we will produce the maximum possible test statistic and smallest possible p-value. The opposite is also true.

Our shorthand in this case is simply to prefix them with min and max. The nomenclature takes a little getting used to, but it dramatically simplifies things in the end.

Thus, the max difference between these two groups is found at:

1.71max (0.22min) vs. 1.63min(0.40min) thus t=1.0006, p=0.3220

And the min difference is found at:

1.71min(0.22max) vs. 1.63max(0.40max) thus t = 0.7554, p=0.4537

Even with these sub-2dp changes to the mean and SD, the t-statistic has changed by around a third.
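These bounds are straightforward to reproduce. The sketch below assumes equal group sizes (n=25 each), in which case the pooled standard error simplifies to sqrt((SD1^2 + SD2^2)/n); the helper name `t_equal_n` is hypothetical.

```r
# Hypothetical helper: between-subjects t for two groups of equal size n
t_equal_n <- function(m1, sd1, m2, sd2, n) {
  (m1 - m2) / sqrt((sd1^2 + sd2^2) / n)
}

n    <- 25
half <- 0.005   # half a unit of the last reported decimal place

# As reported
t_nominal <- t_equal_n(1.71, 0.22, 1.63, 0.40, n)

# Largest possible t: widest mean gap, smallest SDs
t_max <- t_equal_n(1.71 + half, 0.22 - half, 1.63 - half, 0.40 - half, n)

# Smallest possible t: narrowest mean gap, largest SDs
t_min <- t_equal_n(1.71 - half, 0.22 + half, 1.63 + half, 0.40 + half, n)

t_vals <- c(min = t_min, nominal = t_nominal, max = t_max)
p_vals <- 2 * pt(abs(t_vals), df = 2 * n - 2, lower.tail = FALSE)
rbind(t = t_vals, p = p_vals)
```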

So what does RIVETS do? It identifies the likelihood of the test statistic being exactly at the value calculated from the truncated descriptives. It does this by sampling means and SDs anywhere within their possible intervals (so, for the first mean in the above, that would be anywhere from M=1.705 up to, but not including, M=1.715) and calculating how common it is for the test to return the exact p-value reported. Full R code to do this can be found in the RIVETS test on OSF, here.
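To give a feel for the idea, here is a rough Monte Carlo sketch. It is not the OSF implementation: it assumes uniform sampling within each rounding interval, equal group sizes, and that "matching" means the simulated t rounds to the reported t=0.876 at 3dp. The seed and number of simulations are arbitrary.

```r
set.seed(1)
n_sim <- 100000
n     <- 25

# Sample plausible unrounded descriptives within their rounding intervals
m1 <- runif(n_sim, 1.705, 1.715)
s1 <- runif(n_sim, 0.215, 0.225)
m2 <- runif(n_sim, 1.625, 1.635)
s2 <- runif(n_sim, 0.395, 0.405)

# t-statistic for each simulated set of descriptives (equal n per group)
t_sim <- (m1 - m2) / sqrt((s1^2 + s2^2) / n)

# How often does the simulated t round to the reported 0.876?
hit <- abs(t_sim - 0.876) < 0.0005
mean(hit)
```

A small proportion here means that landing exactly on the value implied by the rounded descriptives is unusual for any single test, which is why a whole series of such matches is informative.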

Why would we care if the test statistic and/or p-value were calculated from truncated descriptive statistics? Because that’s how a researcher would hand-calculate them. Most people would simply use 1dp or 2dp if putting the values into a calculator. If a whole series of results is hand-calculated, it is often good evidence that someone was manually tinkering with the values.

(Note: RIVETS was a seminal observation about how uncertainty and data reporting standards interact. While it may not be deployed often, it contains a core principle that is critical to understand, as the same calculation, used in the same way, can be used to establish the maximum and minimum possible test statistics. We will see this in a future section.)

GRIMMER¶

GRIMMER is GRIM but for standard deviations (GRIM + ‘Mapped to Error Repeats’). It’s sufficiently different to justify its own section, but it’s also included here after RIVETS (see above) for a reason.

The original idea was Jordan Anaya’s but the derivation we’ll use here was first outlined by Aurien Allard in 2018, and it’s extremely clever. I’ve borrowed his MathJax code here to show you the implementation.

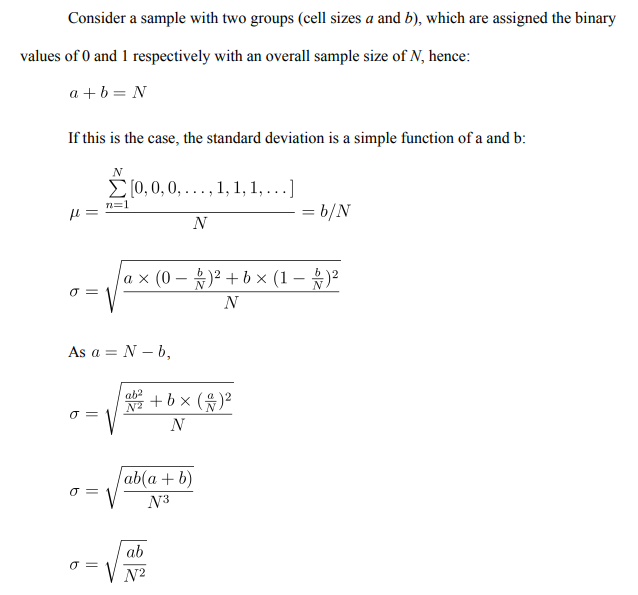

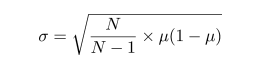

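To start with, we can take this standard definition of the sample variance:

$$ s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1} $$

If we expand the top line, we see:

$$ \sum_{i=1}^{n} (x_i - \bar{x})^2 = \sum_{i=1}^{n} x_i^2 - 2\bar{x}\sum_{i=1}^{n} x_i + n\bar{x}^2 $$

But, the second term there contains the sum of all the included values, as in, the numerator of the mean!

i.e.

$$ \sum_{i=1}^{n} x_i = n\bar{x} $$

So, if we rearrange and collect all of the above, we finally get:

$$ \sum_{i=1}^{n} x_i^2 = (n - 1)s^2 + n\bar{x}^2 $$

And the final observation: the sum of all the squared whole numbers (i.e. the left hand side) is itself, obviously, a whole number, and thus, so is:

$$ (n - 1)s^2 + n\bar{x}^2 $$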

It’s awkward to write out in words, but it makes it even more approachable: (degrees of freedom * variance) plus (n * the mean squared) must be a whole number.
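The identity can be confirmed numerically on any set of whole numbers. The data below are made up purely for illustration.

```r
# Made-up integer data (e.g. word counts)
x <- c(0, 2, 1, 0, 5, 3, 0, 1, 0, 2)
n <- length(x)

# Left hand side: sum of squared values, necessarily a whole number
lhs <- sum(x^2)

# Right hand side: (degrees of freedom * variance) + (n * mean squared)
rhs <- (n - 1) * var(x) + n * mean(x)^2

c(sum_of_squares = lhs, reconstructed = rhs)
```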

A function for this is also in the scrutiny package (see above) and has documentation here.

Let’s do a simple manual example in the meantime, though.

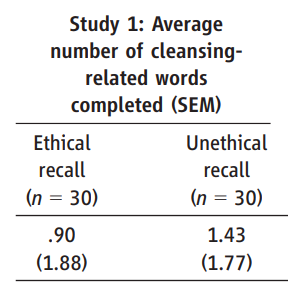

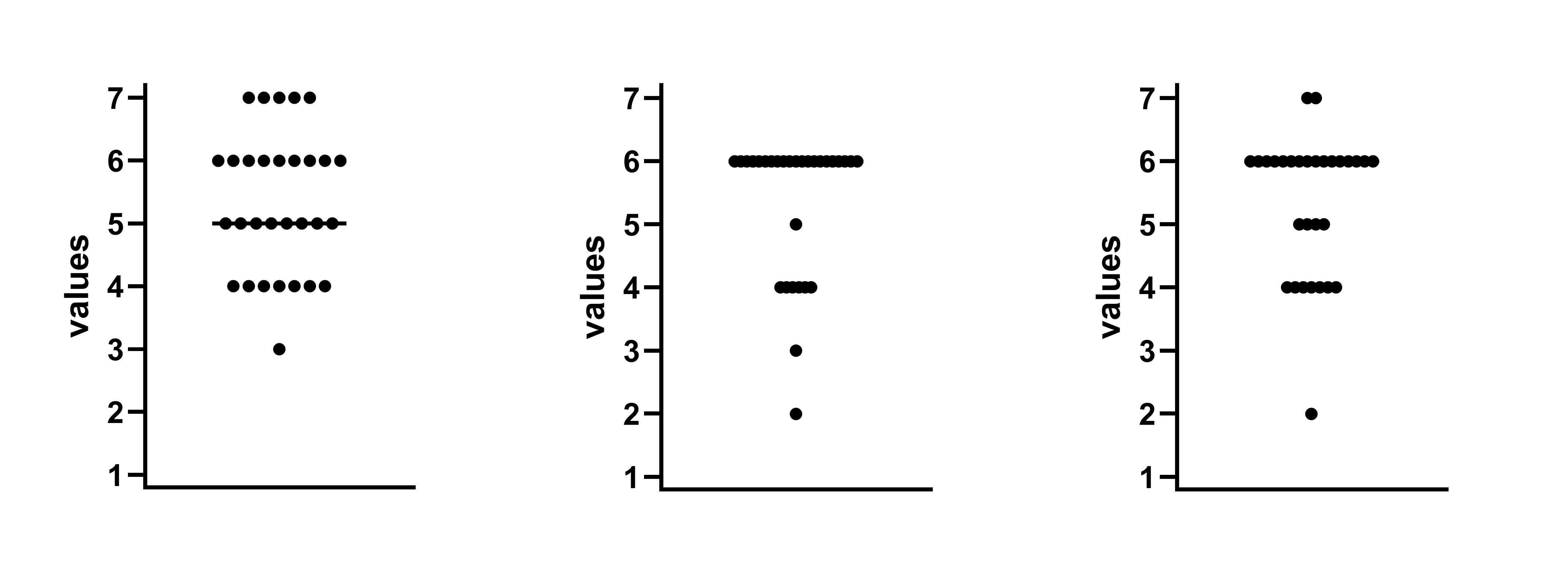

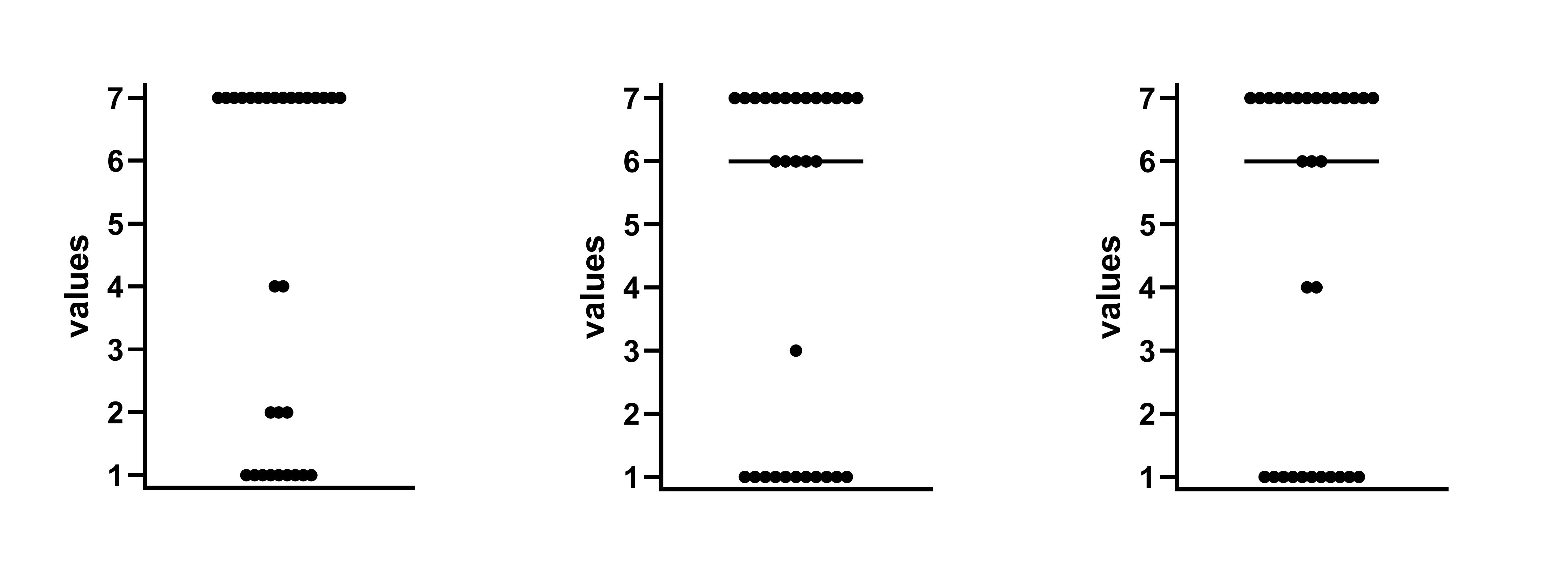

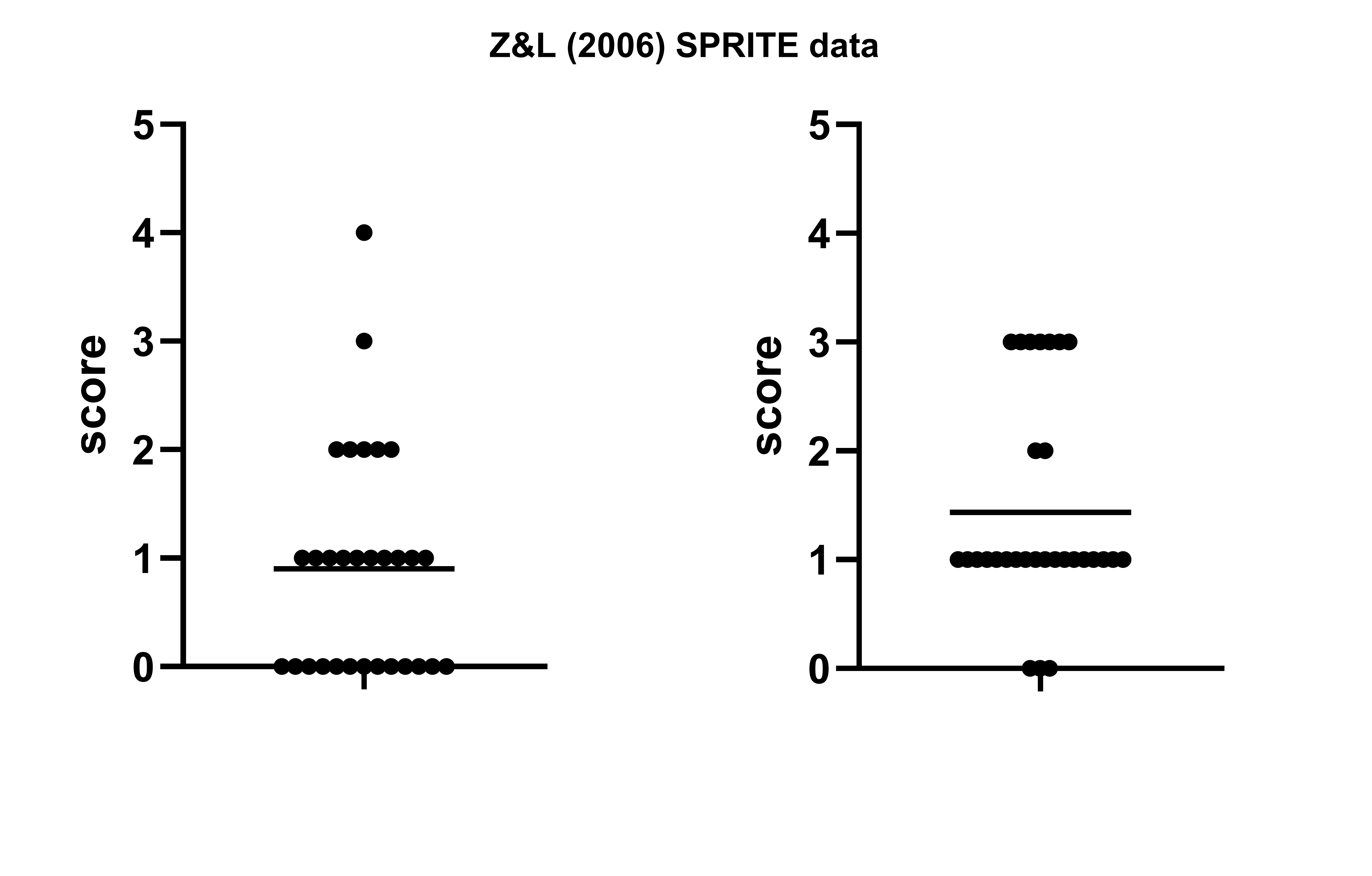

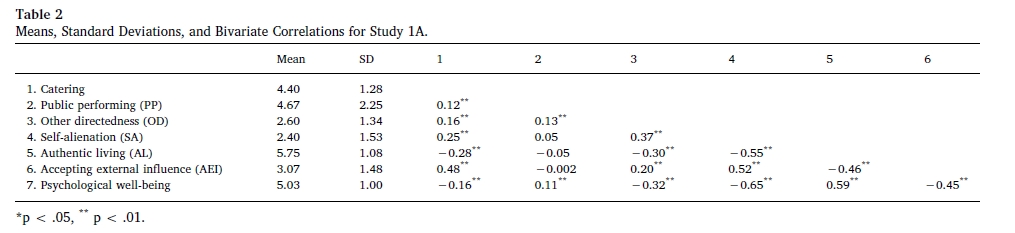

Previously in the GRIM section, we saw Bauer and Francis (2021) analyze a paper by Banerjee et al. (2012) which was later retracted. But that paper relied heavily on a previous report in Science which used a similar task, by Zhong & Liljenquist (2006).

This is a good paper to analyze for a few reasons: (1) it has over 1600 citations, which means it probably SHOULD be checked; (2) it measures ‘average number of words’ returned on a task, a good source of integers to test, as there is no possibility that a ‘half word’ could be reported, and (3) it has an annoying conversion from SEM to SD that presents an extra challenge. When I approached this paper, I initially assumed that there was a typo, and that 1.88 was the SD, NOT the SEM: if 1.88 really were the SEM, the SD would be equal to 1.88*sqrt(30), which is ~10.3 and clearly impossible.

The code to resolve GRIMMER is extremely simple:

n <- 30

SD <- 1.88

mean <- 0.90

(n-1)*SD^2 + n*mean^2

## OUTPUT

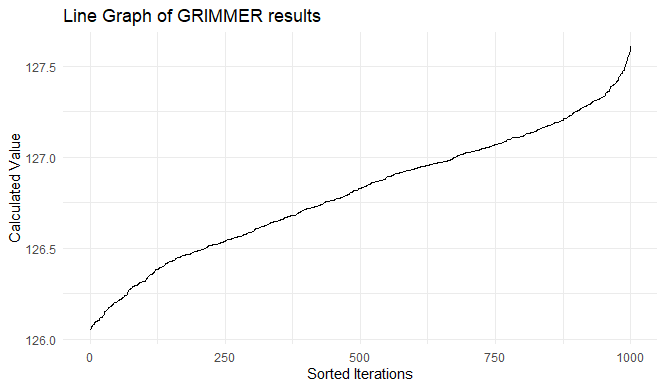

[1] 126.7976

… and we’ve hit a big snag, which is the issue of reporting precision. This is why this section is included after the RIVETS section above, as exactly the same issue is relevant again: we have a range of potential means and potential SDs, rather than precise values.

But this is not a setback, rather, this is where GRIMMER starts to get really interesting. Why? Because (a) we can work around our precision loss by adding our potential decimal tails back in, and look to see if there’s any whole numbers in our possible range, and (b) having identified a whole number solution, we can potentially produce very accurate answers about what our original numbers actually are!

library(ggplot2)

## set up the basic parameters, and a place to park the results

n <- 30

SD <- 1.88

mean <- 0.9

results <- numeric(1000)

## spoof in the imprecision for each calculation, and calculate

for (i in 1:1000) {

random_SD <- SD + runif(1, -0.005, 0.005)

random_mean <- mean + runif(1, -0.005, 0.005)

results[i] <- (n - 1) * random_SD^2 + n * random_mean^2

}

## sort the results, so they don't look like a messy squiggle

results_sorted <- sort(results)

## place results on a single line graph

ggplot(data.frame(x = 1:1000, y = results_sorted), aes(x = x, y = y)) +

geom_line() +

labs(title = "Line Graph of GRIMMER results", x = "Sorted Iterations", y = "Calculated Value") +

theme_minimal()

Executing the above, we produce a graph that looks like this:

And we can confirm that the sum of the squared values (the left hand side of our GRIMMER equation) is overwhelmingly likely to be 127.
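The same conclusion can be reached without simulation, because the GRIMMER expression increases with both the SD and the mean when both are positive, so its extremes sit at the ends of the rounding intervals. The sketch below computes those bounds and then applies one extra fact about whole numbers: a number and its square are both odd or both even, so the sum of squares must have the same parity as the sum of the values. Variable names are illustrative.

```r
n         <- 30
SD        <- 1.88
M         <- 0.90
half_unit <- 0.005

# Smallest and largest possible values of (n-1)*SD^2 + n*mean^2
lower <- (n - 1) * (SD - half_unit)^2 + n * (M - half_unit)^2
upper <- (n - 1) * (SD + half_unit)^2 + n * (M + half_unit)^2

# Whole numbers inside that range
candidates <- seq(ceiling(lower), floor(upper))

# The sum of the values must itself be a whole number: n * mean
sum_x <- round(n * M)

# Keep only candidates with the same parity as the sum of the values
plausible <- candidates[candidates %% 2 == sum_x %% 2]

c(lower = lower, upper = upper)
candidates
plausible
```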

In the past, we have never needed any further analysis beyond this, although a future extension certainly is possible: it might be possible to prove that some combination of:

- the mean

- the standard deviation

- the sample size, and

- the sum of squared values

… is impossible i.e. that any given sample cannot form the identified sum as an integer while maintaining the defined sample properties!

(However, an epilogue in this case: we will see later in the SPRITE section that the assumption we made above, that the SEM was stated incorrectly, was correct… but our assumption of how it was incorrect was wrong.)

Tests for p-values¶

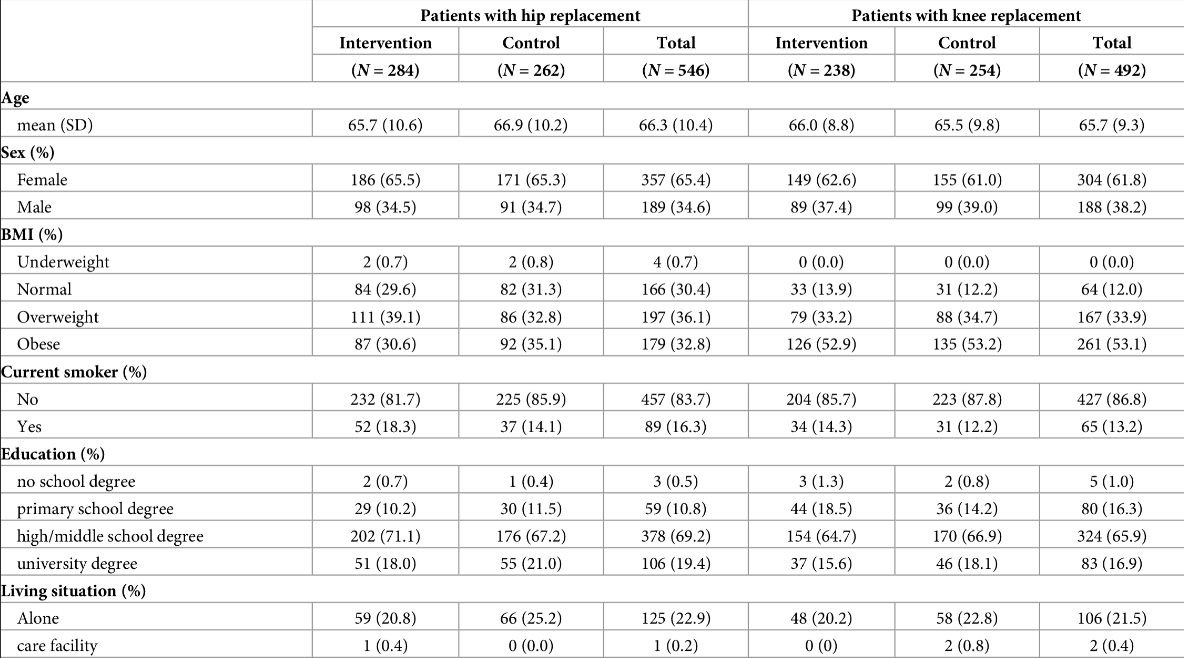

In medical research in particular, when multiple samples are compared, it is standard practice to lay out a ‘Table 1’ which describes the baseline characteristics of the one or more samples included in the research. Here is an example:

Figure 17: A paper chosen completely at random: “Cost-effectiveness of a patient-reported outcome-based remote monitoring and alert intervention for early detection of critical recovery after joint replacement: A randomised controlled trial” (Schöner et al., 2024). Note that in this case the ‘Table 1’ is actually Table 2.

With the data laid out in this format, we have a certain degree of transparency that allows us to get a holistic sense of the research participants. We see both strong similarities (say, the age of an experimental sample vs. a matched control) and strong differences (say, the pancreatic enzymes of a sample with pancreatitis vs. a matched control).

Having established this picture of the dataset, researchers often proceed to analyze it, and a lot of statisticians are very vocal that they shouldn’t. Since Rothman (1977), statisticians have criticized the row-wise analysis of these numbers, and the practice has a name: the Table 1 Fallacy.

From a recent paper (Sherry et al. 2023): “… engaging in significance testing of baseline variable distributions after sound randomisation is not informative, since any baseline variable differences following unbiased randomisation are already known to be due to chance; in other words, significant findings are, by definition, false positives.”

This is perfectly sensible, but the qualifiers in the quote above (“after sound randomisation”, “following unbiased randomisation”) are important: there is no fallacy if the numbers included are incorrect, mistyped, or fraudulent. Using Bartlett’s Test and the STALT test, we can analyze any congruent p-values, no matter what test produces them. However, these are typically used to analyze Table 1 values, and both raise serious red flags if the tests are significant on Table 1 values in particular.

(Thus, while many medical journals in particular are moving away from this rather nonsensical analysis of Table 1, we are in the strange position of knowing that it is technically uninformative to report statistics calculated row-wise from a Table 1, but also hoping that authors and journals report these uninformative statistics as often as possible, because these ‘meaningless’ tests give us a window into the underlying trustworthiness of the paper.)

Assessing p-values with STALT¶

STALT stands for ‘Smaller Than A Lowest Threshold’. This is barely a technique, more of an observation about recalculated test statistics (see Data Techniques: Recalculation). However, it is important enough that it gets its own section, and has its own preprint as well (Heathers and Meyerowitz-Katz, 2024).

Often, statistical results of simple tests are reported like this:

Using a between-subjects t-test, Group A (mean=5.5, SD=2.1, n=125) was confirmed as smaller than Group B (mean=10.1, SD=1.9, n=131), p<0.05.

This is all correctly calculated (according to NHST theory, at least), but it is almost lying by omission. A crucial detail has not been included — how much smaller the p-value is than 0.05.

Reporting like this might lead people to imagine that the result presented might be p=0.03, or maybe p=0.01, or p=0.008. It isn’t. As we’ve now established that it’s easy to re-create between-subjects t-tests, calculate the above now yourself: what might the p-value be?

From the above we can calculate t≈18.39 and df=254, thus the actual p-value is roughly 1e-48.
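For readers who want to run this recalculation themselves, here is a minimal sketch of a pooled-variance between-subjects t-test computed from summary statistics alone. The function name `summary_t_test` is illustrative, and pooling the variances is an assumption (it matches df = n1 + n2 - 2); the exact figures will shift slightly with the test variant chosen and with rounding in the reported values.

```r
# Recalculate a between-subjects t-test from reported summary statistics.
# Assumption: equal-variance (pooled) Student's t-test.
summary_t_test <- function(m1, sd1, n1, m2, sd2, n2) {
  df <- n1 + n2 - 2
  pooled_var <- ((n1 - 1) * sd1^2 + (n2 - 1) * sd2^2) / df
  se <- sqrt(pooled_var * (1 / n1 + 1 / n2))
  t_stat <- (m1 - m2) / se
  # two-sided p-value, returned in full rather than truncated
  p_value <- 2 * pt(-abs(t_stat), df)
  list(t = t_stat, df = df, p = p_value)
}

# Group A vs Group B from the reporting example above
res <- summary_t_test(5.5, 2.1, 125, 10.1, 1.9, 131)
res
```

Whatever the variant, the point stands: the hidden p-value is dozens of orders of magnitude below the reported threshold of 0.05.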

In some experimental contexts, this is extremely unlikely. In other contexts, p-values can be legitimately very small — but in those areas (e.g. genetics) they are not typically reported as ‘p<0.05’ but in scientific notation, e.g. ‘p=3.7e-10’.

That being said, an analyst needs to use a good deal of subjective judgment as to whether or not an extremely small hidden p-value is problematic.

To deal with STALT values, you need to either use software that allows you to calculate p-values analytically (R, as we saw above, works just fine), or use an online calculator capable of returning the long rather than the truncated answer of a statistical test (a good one is available here).

As might be expected, we generally but not exclusively see STALT errors in large-but-hidden treatment effects. They can also occasionally be spotted in large baseline differences between intervention and control groups.

EXAMPLE OF A STALT VALUE IN AN UNUSUAL TREATMENT EFFECT¶

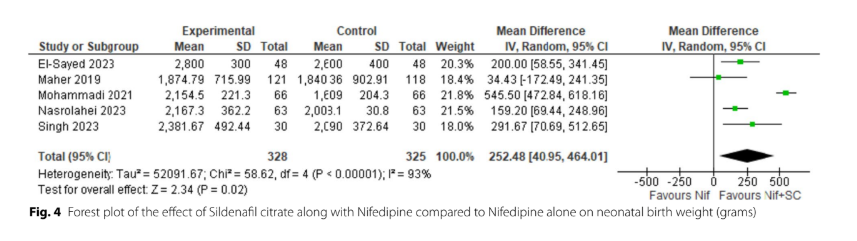

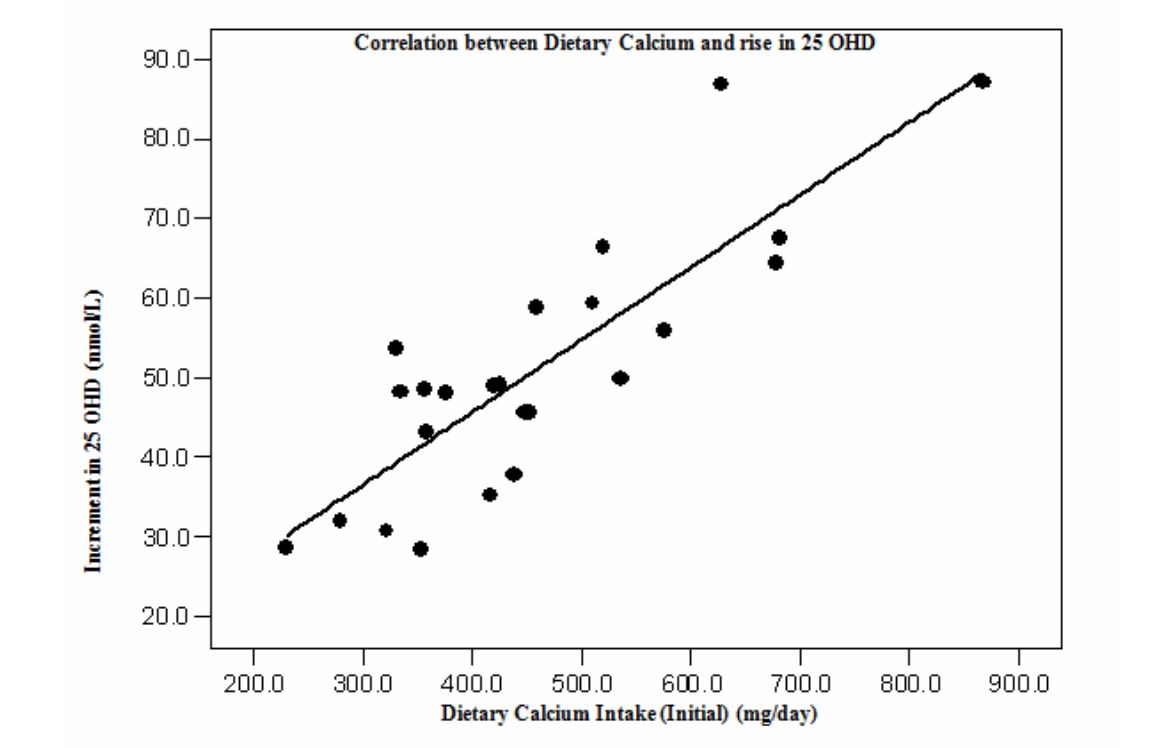

The paper “Comparison of the Effect of Nifedipine Alone and the Combination of Nifedipine and Sildenafil in Delaying Preterm Labor: A Randomized Clinical Trial” by Mohammadi et al. (2021) was passed along to me in a conversation about trial accuracy results, after the forest plot below raised a flag:

Figure 18: Manouchehri et al. (2024). Always question forest plot outliers!

Meta-analyses are great places to find flags, because someone has done the hard work of assembling all the effects for you in one place. This forest plot above tells us that Mohammadi et al. (2021) — the study on line 3 — found that a drug combination given during pregnancy when there is a risk of premature delivery increases the baby’s weight by more than a pound. In a four pound newborn that is worth questioning, because if it’s true, it’s an amazing win for pediatric health.

The birth weight data shown above increased (presumably) because the amount of time for gestation during the study period also increased (16.17 ± 5.14 days in the intervention group, and 9.98 ± 3.50 days in the control group). Both groups are n=66, and the p-value is given as <0.001.

Here, we can recalculate the p-value using the same code as the above section on t-tests:

library(stats)

mean1 <- 16.17

sd1 <- 5.14

n1 <- 66

mean2 <- 9.98

sd2 <- 3.50

n2 <- 66

t_stat <- (mean1 - mean2) / sqrt((sd1^2 / n1) + (sd2^2 / n2))

df <- n1 + n2 - 2

p_value <- 2 * pt(-abs(t_stat), df)

p_value

# OUTPUT

[1] 3.734256e-13

This is extremely concerning for the accuracy of the paper. Beyond the above, there were unfortunately several more untrustworthy data features.

Two logical additions to STALT are (a) using a random sampling method to estimate how substantial a difference between two or more groups has to be in order to produce the published result, and (b) where possible, calculating an effect size.
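As an illustration of addition (b), the sketch below computes a standardized mean difference (Cohen's d with a pooled SD) from the gestation figures quoted above. The function name `cohens_d` is illustrative, and the pooled-SD form of d is an assumption; other effect size definitions would give somewhat different numbers.

```r
# Cohen's d from summary statistics, using the pooled standard deviation.
# Assumption: the pooled-SD version of d is appropriate for these groups.
cohens_d <- function(m1, sd1, n1, m2, sd2, n2) {
  pooled_sd <- sqrt(((n1 - 1) * sd1^2 + (n2 - 1) * sd2^2) / (n1 + n2 - 2))
  (m1 - m2) / pooled_sd
}

# days of prolonged gestation: intervention vs control, n = 66 per group
d <- cohens_d(16.17, 5.14, 66, 9.98, 3.50, 66)
d
```

This gives d of about 1.4, which an analyst can then compare against the effect sizes of the other studies in the same meta-analysis.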

An interesting wrinkle of STALT values is that researchers themselves may be producing them by accident. Many statistical platforms only offer truncated p-values, so it is entirely possible that if researchers input unlikely, inaccurate, or fake data to their calculation method of choice and receive “p<0.001”, in doing so they themselves are never aware that the true p-value is, say, p=5e-18.
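A small sketch of how this truncation hides the magnitude, using base R's `format.pval()` and `format()`. The threshold of 0.001 is an assumption chosen to mimic a typical statistics package display, not the behaviour of any specific platform.

```r
# The same p-value, shown truncated and shown in full.
p <- 3.734256e-13

# truncated display: anything below eps is reported only as "less than eps"
truncated <- format.pval(p, eps = 0.001)

# full display in scientific notation
full <- format(p, scientific = TRUE, digits = 3)

truncated
full
```

A researcher who only ever sees the first form has no way to notice that the second is implausibly small.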

Analyzing multiple Table 1 p-values with the Carlisle Stouffer Fisher test¶

Unlike other tests presented here, this is not a single method, but rather a collection of related tests and applications. However, all of them have the same focus: calculating an omnibus value from the output of multiple independent statistical tests. The most common names are:

(That last paper cites this as the ‘Carlisle-Stouffer-Fisher method’, so we’ll use that terminology; CSF)

These are variations on a theme, and all solve the same problem. Consequently, all suffer from the same central issue: an omnibus test for p-values deployed like this is designed to assess independent p-values. Say, for instance, we have a Table 1 that lists age, height, weight, and BMI. These are all mutually non-independent!

- We know people get heavier as they age

- We know taller people weigh more

- We know that BMI is a straightforward function of height and weight

If we correlated these four variables, every value in the correlation table would very likely be positive, with a minimum of around r=0.3 (and some a lot higher). This could dramatically increase the likelihood of the CSF test reporting a false positive.

However.

As we saw in the STALT section, there is a place where the practical application of forensic metascience tests meets the statistical assessment of them, and the analyst is forced to use imperfect tests judiciously. In Carlisle’s original research on suspicious papers, 43 of them returned a p-value from the CSF method that was less than 1e-15. Thus, while we cannot correct for the non-independence of the p-values, this is an incredibly strong signal that something is amiss, as the likelihood of even imperfect and highly multicollinear data returning this p-value is practically zero.

(It would make a good Masters project to determine exactly how much the expected Table 1 interdependency affects the use of this test on real-world data.)
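As a rough first-order illustration of the non-independence concern, the sketch below works out the false positive rate of a two-sided Stouffer-style test when the combined z-scores are correlated. It rests on a strong simplifying assumption that is not taken from any of the papers cited here: under the null, the k z-scores are standard normal and share one common pairwise correlation `rho`, so that their scaled sum has variance 1 + (k - 1) * rho. The function name `inflated_alpha` is illustrative.

```r
# False positive rate of a two-sided Stouffer test at nominal level alpha
# when the k combined z-scores are equicorrelated.
# Assumption: z-scores are standard normal with common correlation rho.
inflated_alpha <- function(k, rho, alpha = 0.05) {
  z_crit <- qnorm(1 - alpha / 2)
  # standard deviation of sum(z) / sqrt(k) under equicorrelation
  sd_combined <- sqrt(1 + (k - 1) * rho)
  2 * pnorm(-z_crit / sd_combined)
}

# four variables (e.g. age, height, weight, BMI) correlated at r = 0.3
inflated_alpha(k = 4, rho = 0.3)

# sanity check: with independent z-scores the nominal level is recovered
inflated_alpha(k = 4, rho = 0)
```

Under this toy model, a nominal 5% test with four variables correlated at 0.3 flags roughly 15% of honest tables, which is why moderate omnibus p-values deserve caution while values like 1e-15 do not depend on this caveat.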

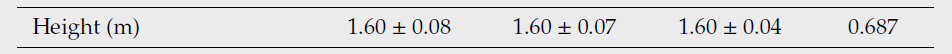

Calculation of the CSF test using Fisher’s traditional method is straightforward. To put this into context, we can use a famous retracted paper by the researcher Yoshitaka Fujii from 2005. Because the paper is heavily defaced with the RETRACTION notice, I have recreated the table below so you can see it:

Table 5: Fujii and Shiga (2005), Table 1.

| | 25mg (n=30) | 50mg (n=30) | 75mg (n=30) | 100mg (n=30) |
|---|---|---|---|---|
| Mean age (SD) | 41(12) | 41(12) | 41(12) | 42(12) |
| Sex (M/F) | 15/15 | 16/14 | 15/15 | 16/14 |
| Mean height (SD) | 161(8) | 161(8) | 163(8) | 162(10) |
| Mean weight (SD) | 57(10) | 58(10) | 59(9) | 59(10) |
| Mean initial dosage (SD) | 29(4) | 29(5) | 30(5) | 30(5) |

This data is from a (fabricated) paper where medical outcomes while taking propofol, a powerful anesthetic, were modified by simultaneously treating patients with a second anti-inflammatory drug.

It should probably not come as a surprise that these numbers are visibly homogeneous. In a situation like this, the CSF test attempts to quantify that homogeneity. As the different rows imply the use of different straightforward tests, we simply apply a reasonable test to each row.

(Note: there might be a built-in function to calculate the one-way ANOVA from summary statistics which doesn’t involve writing out all the steps! The below still works, though.)

# R code to reproduce a one-way ANOVA from summary statistics

# James Heathers, 10/24

# load the necessary library

library(stats)

# define the parameters

n <- 30

means <- c(41, 41, 41, 42)

sds <- c(12, 12, 12, 12)

k <- length(means)

# calculate the total observations

N <- n * k

# calculate the overall mean

overall_mean <- sum(means) / k

# calculate the between-group SS

SSB <- sum(n * (means - overall_mean)^2)

# calculate the within-group SS

SSW <- sum((n - 1) * sds^2)

# calculate the degrees of freedom

df_between <- k - 1

df_within <- N - k

# calculate the mean squares

MSB <- SSB / df_between

MSW <- SSW / df_within

# calculate the F-value

F_value <- MSB / MSW

# and finally, calculate the p-value

p_value <- pf(F_value, df_between, df_within, lower.tail = FALSE)

# thus, the p-value and F-value:

F_value

p_value

This returns a very small F-value (F=0.0521) and a very large p-value (p=0.9842)! We can repeat this for all the relevant lines thus:

Table 6: The p-values from the above. * used a chi-squared test of independence

| | p-value |
|---|---|
| Mean age (SD) | p=0.9842 |
| Sex (M/F) | p=0.9875* |
| Mean height (SD) | p=0.77 |
| Mean weight (SD) | p=0.8335 |
| Mean initial dosage (SD) | p=0.7251 |
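The row-by-row repetition can be sketched compactly by wrapping the one-way ANOVA steps in a function and using base R's `chisq.test()` for the sex row. This is a minimal sketch, not the original analysis script: the function name `anova_p_from_summary` is illustrative, equal group sizes are assumed throughout, and the inputs are the values from Table 5.

```r
# One-way ANOVA p-value from group means and SDs.
# Assumption: every group has the same size n.
anova_p_from_summary <- function(means, sds, n) {
  k <- length(means)
  grand_mean <- mean(means)
  ssb <- sum(n * (means - grand_mean)^2)  # between-group sum of squares
  ssw <- sum((n - 1) * sds^2)             # within-group sum of squares
  df_between <- k - 1
  df_within <- k * n - k
  f_value <- (ssb / df_between) / (ssw / df_within)
  pf(f_value, df_between, df_within, lower.tail = FALSE)
}

p_age    <- anova_p_from_summary(c(41, 41, 41, 42), c(12, 12, 12, 12), 30)
p_height <- anova_p_from_summary(c(161, 161, 163, 162), c(8, 8, 8, 10), 30)
p_weight <- anova_p_from_summary(c(57, 58, 59, 59), c(10, 10, 9, 10), 30)
p_dosage <- anova_p_from_summary(c(29, 29, 30, 30), c(4, 5, 5, 5), 30)

# sex row: chi-squared test of independence on the 2 x 4 table of counts
sex_counts <- matrix(c(15, 16, 15, 16,   # male
                       15, 14, 15, 14),  # female
                     nrow = 2, byrow = TRUE)
p_sex <- chisq.test(sex_counts)$p.value

round(c(age = p_age, sex = p_sex, height = p_height,
        weight = p_weight, dosage = p_dosage), 4)
```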

Now that we have our p-values, we can use Stouffer’s Test, which is already in the R library meta, as it has some obvious uses in traditional meta-analysis.

# R code to calculate Stouffer's Test

# James Heathers, 10/24

# grab the necessary library (note: the steps below use only base R functions)

library(meta)

# input p-values

p_values <- c(0.9842, 0.9875, 0.77, 0.8335, 0.7251)

# convert p-values to z-scores

z_scores <- qnorm(1 - p_values)

# Stouffer's Test

stouffer_test <- sum(z_scores) / sqrt(length(z_scores))

# calculate the omnibus p-value

combined_p_value <- 2 * (1 - pnorm(abs(stouffer_test)))

In this case, we get p=0.002749.
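For comparison, Fisher's method combines the same p-values through their logarithms: the statistic -2 * sum(log(p)) follows a chi-squared distribution with 2k degrees of freedom when the k p-values are independent and uniform. The sketch below is a minimal base R version. Reading the lower tail as a signal of "too similar" groups is an assumption made here for illustration, and is not claimed to be the exact procedure used in the papers cited above.

```r
# Fisher's method on the Table 6 p-values.
p_values <- c(0.9842, 0.9875, 0.77, 0.8335, 0.7251)

fisher_stat <- -2 * sum(log(p_values))
fisher_df <- 2 * length(p_values)

# upper tail: are the p-values collectively too small (groups too different)?
p_too_different <- pchisq(fisher_stat, fisher_df, lower.tail = FALSE)

# lower tail: are the p-values collectively too large (groups too similar)?
# Assumption: this tail is the one of interest for suspicious homogeneity.
p_too_similar <- pchisq(fisher_stat, fisher_df, lower.tail = TRUE)

fisher_stat
p_too_different
p_too_similar
```

Here the lower tail is the small one, pointing in the same direction as the Stouffer result: the baseline rows agree with each other more closely than independent randomised groups usually would.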

So far, these calculations have been quite straightforward, but now we have reached the hard part: assume we saw this paper before it was retracted, and before we knew Fujii would accrue a total of 172 lifetime retractions, making him the second most fraudulent researcher in human history.

How should we make a decision about the trustworthiness of this paper if our (admittedly imperfect) test has returned a p-value of ~0.003?

The answer, unfortunately, is ‘it’s tricky’. There are some issues to consider:

- Just exactly how small is the p-value?

- Are there other errors in the paper?

- Are there similar CSF errors in papers by the same author/s?

- What are the calculated equivalent values of other studies in the same area?

We have encountered situations where:

(a) a value of this magnitude (or greater, think p=10e-15) is one amongst many incongruities in the paper, the researcher is being investigated for widespread fraud, and several studies by different authors in the same field return much more regular p-values from Stouffer’s Test…

But also where:

(b) the CSF error is moderate in magnitude (e.g. p=0.01), is found in isolation, appears not too materially different from other papers in the area, and we have no suspicions about the author.